I deal with a lot of servers at work, and one thing everyone wants to know about their servers is how close they are to being at max utilization. It should be easy, right? Just pull up top or another system monitor tool, look at network, memory and CPU utilization, and whichever one is the highest tells you how close you are to the limits.

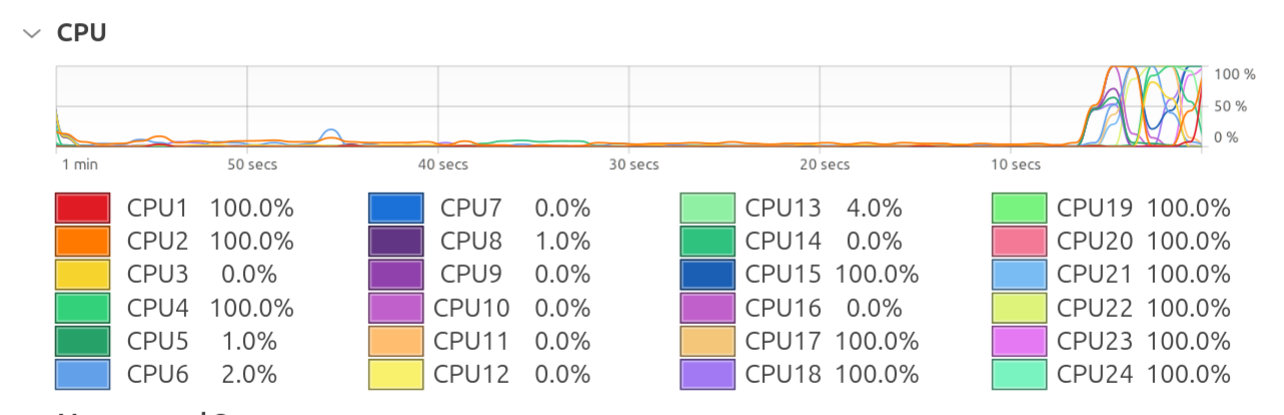

For example, this machine is at 50% CPU utilization, so it can probably do twice as much of whatever it's doing.

And yet, whenever people actually try to project these numbers, they find that CPU utilization doesn't quite increase linearly. But how bad could it possibly be?

To answer this question, I ran a bunch of stress tests and monitored both how much work they did and what the system-reported CPU utilization was, then graphed the results.

I vibe-coded a script that runs stress-ng in a loop, first using one worker per logical core and attempting to run each of them at a target utilization from 1% to 100%, then using 1 to N workers all at 100% utilization. It ran several different stress-testing methods and measured the number of operations each one could complete ("Bogo ops"). For my test machine, I used a desktop computer running Ubuntu with a Ryzen 9 5900X (12 cores, 24 threads) processor. I also enabled Precision Boost Overdrive (i.e. Turbo).
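A minimal sketch of what such a loop might look like, for anyone who wants to try this at home. This is not the actual script: the function names, the 10-second duration, and the particular load steps are assumptions for illustration. It only builds the command lines, using stress-ng's `--cpu`, `--cpu-load`, `--cpu-method`, `--timeout` and `--metrics-brief` options.

```python
import shlex


def stress_ng_command(workers, load_percent, method="all", seconds=10):
    """Build one stress-ng invocation as an argument list (hypothetical helper)."""
    return [
        "stress-ng",
        "--cpu", str(workers),             # number of CPU stress workers
        "--cpu-load", str(load_percent),   # target load per worker, in percent
        "--cpu-method", method,            # which CPU stress method to run
        "--timeout", f"{seconds}s",        # assumed duration, not from the article
        "--metrics-brief",                 # print bogo ops when the run ends
    ]


def sweep_plan(logical_cpus, method="all", load_steps=(1, 25, 50, 75, 100)):
    """Return both sweeps described above as a list of commands."""
    plan = []
    # Sweep 1: one worker per logical CPU, each throttled to a target load.
    for load in load_steps:
        plan.append(stress_ng_command(logical_cpus, load, method))
    # Sweep 2: 1..N workers, each running at 100%.
    for workers in range(1, logical_cpus + 1):
        plan.append(stress_ng_command(workers, 100, method))
    return plan


if __name__ == "__main__":
    # Print the first few commands instead of running them.
    for cmd in sweep_plan(logical_cpus=24)[:3]:
        print(shlex.join(cmd))
```

Each command could then be passed to `subprocess.run` while something else samples the reported CPU utilization.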

The reason I did two different methods was that operating systems are smart about how they schedule work. A small number of workers at 100% utilization can be scheduled optimally (spoilers), but with 24 workers all at 50% utilization it's hard for the OS to do anything other than spread the work evenly.

You can see the raw CSV results here.

The most basic test just runs all of stress-ng's CPU stress tests in a loop.

You can see that when the system is reporting 50% CPU utilization, it's actually doing 60-65% of the actual maximum work it can do.
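To put that in capacity-planning terms: reading "50%" at face value suggests room for 2x the current work, but if the machine is already at 60-65% of its real maximum, the headroom is closer to 1.5x to 1.7x. The arithmetic, as a small sketch (the function name is made up for illustration):

```python
def remaining_headroom(work_fraction):
    """How many times the current workload fits, given the fraction of
    maximum work already being done (0 < work_fraction <= 1)."""
    if not 0 < work_fraction <= 1:
        raise ValueError("work_fraction must be in (0, 1]")
    return 1.0 / work_fraction


if __name__ == "__main__":
    print(f"naive reading of 50%: {remaining_headroom(0.50):.2f}x")
    for measured in (0.60, 0.65):
        print(f"actually at {measured:.0%} of max: {remaining_headroom(measured):.2f}x")
```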

But maybe that one was just a fluke. What if we just run some random math on 64-bit integers?

This one is even worse! At "50% utilization", we're actually doing 65-85% of the max work we can get done. It can't possibly get worse than that though, right?

Something is definitely off. Doing matrix math, "50% utilization" is actually 80% to 100% of the max work that can be done.

In case you were wondering about the system monitor screenshot from the start of the article, that was a matrix math test running with 12 workers, and you can see that it really did report 50% utilization even though additional workers do absolutely nothing (except make the utilization number go up).

You might notice that the graphs keep changing slope at 50%, and I've helpfully added piecewise linear regressions showing the fit.
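For the curious, a piecewise linear fit with a fixed breakpoint can be sketched in a few lines of plain Python. This is an illustration of the technique, not the code behind the graphs. The sample points below are synthetic, generated from a made-up two-slope curve, and are not measurements.

```python
def least_squares(xs, ys):
    """Ordinary least-squares line through the points; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def piecewise_fit(utilization, work, breakpoint=50.0):
    """Fit one line at or below the breakpoint and another at or above it."""
    fits = []
    for keep in (lambda u: u <= breakpoint, lambda u: u >= breakpoint):
        points = [(u, w) for u, w in zip(utilization, work) if keep(u)]
        xs, ys = zip(*points)
        fits.append(least_squares(xs, ys))
    return fits


if __name__ == "__main__":
    # Synthetic curve (NOT measured data): steep up to 50%, shallow afterwards.
    util = list(range(0, 101, 10))
    work = [1.6 * u if u <= 50 else 80 + 0.4 * (u - 50) for u in util]
    for name, (slope, intercept) in zip(("below 50%", "above 50%"), piecewise_fit(util, work)):
        print(f"{name}: work = {slope:.2f} * utilization + {intercept:.2f}")
```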

The main reason this is happening is hyperthreading: this machine (like most machines) exposes each physical core to the OS as two logical cores that share its resources. If I run 12 workers on this machine, they each get scheduled on their own physical core with no shared resources, but once I go over that, each additional worker is sharing a physical core with another worker. In some cases (general CPU benchmarks), this makes each additional worker somewhat less productive, and in some cases (SIMD-heavy matrix math), there are no useful resources left to share.
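A toy model makes the shape of the curves easier to reason about. Assume the first 12 workers each contribute one unit of work, and every worker after that contributes only a fraction of a unit because it shares a physical core. That fraction (`smt_gain` below) is a hypothetical knob, not something I measured, and the model ignores Turbo entirely.

```python
def work_fraction(workers, physical_cores=12, smt_gain=0.5):
    """Fraction of maximum throughput reached with `workers` busy threads.

    Toy model: each of the first `physical_cores` workers adds 1 unit of work,
    and each worker beyond that adds `smt_gain` units (0 = no benefit from the
    sibling thread, 1 = as good as another full core).
    """
    logical_cores = 2 * physical_cores
    workers = max(0, min(workers, logical_cores))
    on_own_core = min(workers, physical_cores)
    sharing_a_core = workers - on_own_core
    done = on_own_core + smt_gain * sharing_a_core
    maximum = physical_cores + smt_gain * physical_cores
    return done / maximum


if __name__ == "__main__":
    # 12 of 24 logical cores busy is what the OS reports as 50% utilization.
    for gain in (1.0, 0.5, 0.0):
        print(f"smt_gain={gain}: 50% reported, {work_fraction(12, smt_gain=gain):.0%} of max work")
```

With `smt_gain=0`, the model reproduces the matrix math case: 12 workers already do all the work the machine can do, and the other 12 only move the utilization number.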

It's harder to see, but Turbo is also having an effect. This particular processor runs at 4.9 GHz at low utilization, but slowly drops to 4.3 GHz as more cores become active.

Note the zoomed-in y-axis. The clock speed "only" drops by about 12% on this processor (from 4.9 GHz to 4.3 GHz).

Since CPU utilization is calculated as busy cycles / total cycles, this means the denominator is getting smaller as the numerator gets larger, so we get yet another reason why actual CPU utilization increases faster than linearly.
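Here is a rough sketch of that effect in isolation. It assumes the clock falls linearly from 4.9 GHz with one active core to 4.3 GHz with all 12 physical cores active, and that work is proportional to clock speed. Both are simplifications I'm making for illustration (the real curve isn't a straight line), and hyperthreading is ignored.

```python
def clock_ghz(active_cores, total_cores=12, low_load=4.9, full_load=4.3):
    """Assumed clock speed: linear drop from `low_load` GHz with one active
    core to `full_load` GHz with every core active."""
    if total_cores <= 1:
        return full_load
    t = (active_cores - 1) / (total_cores - 1)
    return low_load + t * (full_load - low_load)


def work_fraction_with_turbo(active_cores, total_cores=12):
    """Cycles per second delivered by the active cores, relative to what the
    whole chip delivers with every core busy."""
    done = active_cores * clock_ghz(active_cores, total_cores)
    maximum = total_cores * clock_ghz(total_cores, total_cores)
    return done / maximum


if __name__ == "__main__":
    for cores in (1, 3, 6, 9, 12):
        busy_share = cores / 12
        print(f"{cores:2d} cores busy ({busy_share:.0%}): "
              f"{work_fraction_with_turbo(cores):.1%} of max cycles")
```

Under these assumptions, the share of maximum work is always a bit higher than the share of busy cores, until both meet at 100%.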

If you look at CPU utilization and assume it will increase linearly, you're going to have a rough time. If you're using the CPU efficiently (running above "50%" utilization), the reported utilization is an underestimate, sometimes significantly so.

And keep in mind that I've only shown results for one processor, but hyperthreading performance and Turbo behavior can vary wildly between different processors, especially from different companies (AMD vs Intel).

The best way I know to work around this is to run benchmarks and monitor actual work done:

- Benchmark how much work your server can do before having errors or unacceptable latency.

- Report how much work your server is currently doing.

- Compare those two metrics instead of CPU utilization.
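The comparison in the steps above is just a ratio. A minimal sketch, where the function name and the requests-per-second numbers are hypothetical placeholders for whatever unit of work your server actually does:

```python
def capacity_used(current_rate, benchmarked_max_rate):
    """Share of benchmarked capacity in use, based on work done rather than
    CPU utilization. Both rates must use the same unit (e.g. requests/second)."""
    if benchmarked_max_rate <= 0:
        raise ValueError("benchmarked_max_rate must be positive")
    return current_rate / benchmarked_max_rate


if __name__ == "__main__":
    # Hypothetical numbers: the benchmark topped out at 2000 req/s before
    # latency became unacceptable, and the server is currently doing 1300 req/s.
    print(f"{capacity_used(1300, 2000):.0%} of benchmarked capacity in use")
```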