There was what everyone agrees was a high quality critique of the timelines component of AI 2027, by the LessWrong user and Substack writer Titotal.

It is great to have thoughtful critiques like this. The way you get actual thoughtful critiques like this, of course, is to post the wrong answer (at length) on the internet, and then respond by listening to the feedback and by making your model less wrong.

This is a high-effort, highly detailed, real engagement with this section. It gives the original authors the opportunity to critique the critique, warns readers to beware errors, gives time to respond, shares the code used to generate the graphs, does a bunch of math work, and so on. That is The Way.

So, Titotal: Thank you.

I note up front that at least Daniel Kokotajlo has indeed adjusted his estimates, and has moved his median from ‘AI 2027’ to ‘AI 2028’ based on events since publication, and Eli’s revisions also push the estimates back a bit.

I also note up front that if you evaluated most statements made in the discourse (either non-worried AI forecasting, or AI in general, or more broadly) with this level of rigor, mostly you couldn’t because you’d hit ‘I made it up’ very quickly, but in other cases where someone is trying at least a little, in my experience the models fall apart a lot worse and a lot faster. No one has suggested ‘here is a better attempt to forecast the future and take the whole thing seriously’ that I consider to have a reasonable claim to that.

A lot of the disagreements come down to how much one should care about which calculations and graphs match past data how closely in different contexts. Titotal demands very strong adherence throughout. I think it’s good to challenge and poke at the gaps but this seems to in several places go too far.

Note that this section is about discourse rather than the model, so many of you can skip it.

While I once again want to say up front that I am very much thankful for the substance of this critique, it would also be great to have an equally thoughtful headline presentation of such critiques. That, alas, (although again, thanks for writing this!) we did not get.

It is called ‘A deep critique of AI 2027’s bad timeline model.’ One could simply not use the word ‘bad’ here and we would still know you have strong disagreements with it. There is much similar talk throughout, starting with the title and then this, the first use of bold:

Titotal (formatting in original): The article is huge, so I focussed on one section alone: their “timelines forecast” code and accompanying methodology section. Not to mince words, I think it’s pretty bad.

I’m not full on ‘please reconsider your use of adjectives’ but, well, maybe? Here is an active defense of the use of the word ‘bad’ here:

Neel Nanda: I agree in general [to try and not call things bad], but think that titotal's specific use was fine. In my opinion, the main goal of that post was not to engage with AI 2027, which had already been done extensively in private, but rather to communicate their views to the broader community.

Titles in particular are extremely limited, many people only read the title, and titles are a key way people decide whether to read on, and efficiency of communication is extremely important.

The point they were trying to convey was these models that are treated as high status and prestigious should not be and I disagree that non-violent communication could have achieved a similar effect to that title (note, I don't particularly like how they framed the post, but I think this was perfectly reasonable from their perspective.)

I mean, yes, if the goal of the post was to lower the status and prestige of AI 2027 and to do so through people reading the title and updating in that way, rather than to offer a helpful critique, then it is true that the title was the best local way to achieve that objective, epistemic commons be damned. I would hope for a different goal?

There are more of these jabs, and a matching persistent attitude and framing, sprinkled throughout what is in its actual content an excellent set of critiques - I find much that I object to, but I think a good critique here should look like that. Most of your objections should be successfully answered. Others can be improved. This is all the system working as designed, and the assessments don’t match the content.

To skip ahead, the author is a physicist, which is great except that they are effectively holding AI 2027 largely to the standards of a physics model before they would deem it fit for anyone to use it to make life decisions, even if this is ‘what peak modeling performance looks like.’

Except that you don’t get to punt the decisions, and Bayes Rule is real. Sharing one’s probability estimates and the reasons behind them is highly useful, and you can and should use that to help you make better decisions.

Tyler Cowen’s presentation of the criticism then compounds this, entitled ‘Modeling errors in AI doom circles’ (which is pejorative on multiple levels), calling the critique ‘excellent’ (the critique in its title calls the original ‘bad’), then presenting this as an argument for why this proves they should have… submitted AI 2027 to a journal? Huh?

Tyler Cowen: There is much more detail (and additional scenarios) at the link. For years now, I have been pushing the line of “AI doom talk needs traditional peer review and formal modeling,” and I view this episode as vindication of that view.

That was absurd years ago. It is equally absurd now, unless the goal of this communication is to lower the status of its subject.

This is the peer! This is the review! That is how all of this works! This is it working!

Classic ‘if you want the right answer, post the (ideally less) wrong one on the internet.’ The system works. Whereas traditional peer review is completely broken here.

Indeed, Titotal says it themselves.

Titotal: What makes AI 2027 different from other similar short stories is that it is presented as a forecast based on rigorous modelling and data analysis from forecasting experts. It is accompanied by five appendices of “detailed research supporting these predictions” and a codebase for simulations.

…

Now, I was originally happy to dismiss this work and just wait for their predictions to fail, but this thing just keeps spreading, including a youtube video with millions of views.

As in: I wasn’t going to engage with any of this until I saw it getting those millions of views, only then did I actually look at any of it.

Which is tough but totally fair, a highly sensible decision algorithm, except for the part where Titotal dismissed the whole thing as bogus before actually looking.

The implications are clear. You want peer review? Earn it with views. Get peers.

It is strange to see these two juxtaposed together. You get the detailed thoughtful critique for those who Read the Whole Thing. For those who don’t, at the beginning and conclusion, you get vibes.

Also (I discovered this after I’d finished analyzing the post) it turns out this person’s substack (called Timeline Topography Tales) is focused on, well, I’ll let Titotal explain, by sharing the most recent headlines and the relevant taglines in order, that appear before you click ‘see all’:

15 Simple AI Image prompts that stump ChatGPT

Slopworld 2035: The dangers of mediocre AI. None of this was written with AI assistance.

AI is not taking over material science (for now): an analysis and conference report. Confidence check: This is my field of expertise, I work in the field and I have a PhD in the subject.

A nerds guide to dating: Disclaimer: this blog is usually about debunking singularity nerds. This is not a typical article, nor is it my area of expertise.

The walled marketplace of ideas: A statistical critique of SSC book reviews.

Is ‘superhuman’ AI forecasting BS? Some experiments on the “539” bot from the Centre for AI Safety.

Most smart and skilled people are outside of the EA/rationalist community: An analysis.

I’m not saying this is someone who has an axe and is grinding it, but it is what it is.

Despite this, it is indeed a substantively excellent post, so LessWrong has awarded this post 273 karma as of this writing, very high and more than I’ve ever gotten in a single post, and 213 on the EA forum, also more than I’ve ever gotten in a single post.

Okay, with that out of the way up top, who wants to stay and Do Forecasting?

This tripped me up initially, so it’s worth clarifying up front.

The AI 2027 model has two distinct sources of superexponentiality. That is why Titotal will later talk about there being an exponential model and a superexponential model, and then that there is a superexponential effect applied to both.

The first source is AI automation of AI R&D. It should be clear why this effect is present.

The second source is a reduction in difficulty of doubling the length or reliability of tasks, once the lengths in question pass basic thresholds. As in, at some point, it is a lot easier to go from reliably doing one year tasks to two year tasks, than it is to go from one hour to two hours, or from one minute to two minutes. I think this is true in humans, and likely true for AIs in the circumstances in question, as well. But you certainly could challenge this claim.

Okay, that’s out of the way, on to the mainline explanation.

Summarizing the breakdown of the AI 2027 model:

The headline number is the time until development of ‘superhuman coders’ (SC), that can do an AI researcher job 30x as fast and 30x cheaper than a human.

Two methods are used, ‘time horizon extension’ and ‘benchmarks and gaps.’

There is also a general subjective ‘all things considered.’

Titotal (matching my understanding): The time horizon method is based on 80% time horizons from this report, where the team at METR tried to compare the performance of AI on various AI R&D tasks and quantify how difficult they are by comparing to human researchers. An 80% “time horizon” of 1 hour would mean that an AI has an overall success rate of 80% on a variety of selected tasks that would take a human AI researcher 1 hour to complete, presumably taking much less time than the humans (although I couldn’t find this statement explicitly).

The claim of the METR report is that the time horizon of tasks that AI can do has been increasing at an exponential rate. The following is one of the graphs showing this progress: note the logarithmic scale on the y-axis:

Titotal warns that this report is ‘quite recent, not peer-reviewed and not replicated.’ Okay. Sure. AI comes at you fast. The above graph is already out of date, and the o3 and Opus 4 (or even Sonnet 4) data points should further support the ‘faster progress recently’ hypothesis.

The first complaint is that they don’t include uncertainty in current estimates, and this is framed (you see this a lot) as one-directional uncertainty: Maybe the result is accurate, maybe it’s too aggressive.

But we don’t know whether or not this is the new normal or just noise or temporary bump where we’ll go back to the long term trend at some point. If you look at a graph of Moore’s law, for example, there are many points where growth is temporarily higher or lower than the long term trend. It’s the long term curve you are trying to estimate, you should be estimating the long term curve parameters, not the current day parameters.

This is already dangerously close to assuming the conclusion that there is a long term trend line (a ‘normal’), and we only have to find out what it is. This goes directly up against the central thesis being critiqued, which is that the curve bends when AI speeds up coding and AI R&D in a positive feedback loop.

There are three possibilities here:

We have a recent blip of faster than ‘normal’ progress and will go back to trend.

You could even suggest, this is a last gasp of reasoning models and inference scaling, and soon we’ll stall out entirely. You never know.

We have a ‘new normal’ and will continue on the new trend.

We have a pattern of things accelerating, and they will keep accelerating.

That’s where the whole ‘super exponential’ part comes in. I think the good critique here is that we should have a lot of uncertainty regarding which of these is true.

So what’s up with that ‘super exponential’ curve? They choose to model this as ‘each subsequent doubling time is 10% shorter than the one before.’ Titotal does some transformational math (which I won’t check) and draws curves.
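To make the ‘10% shorter each time’ rule concrete, here is a minimal sketch of the schedule it implies. The 4.5 month first doubling and the function names are illustrative assumptions, not taken from the AI 2027 code, and this steps through whole doublings rather than reproducing Titotal’s continuous version.

```python
def doubling_schedule(first_doubling, shrink, n_doublings):
    """Return (doubling_times, cumulative_times) for n_doublings doublings.

    first_doubling: length of the first doubling, in months (assumed value).
    shrink: fraction by which each doubling time is shorter than the last
            (0.10 for the '10% shorter' rule, 0.0 for a plain exponential).
    """
    times, cumulative, elapsed = [], [], 0.0
    current = first_doubling
    for _ in range(n_doublings):
        elapsed += current
        times.append(current)
        cumulative.append(elapsed)
        current *= 1.0 - shrink  # the next doubling is shorter by `shrink`
    return times, cumulative


def time_limit(first_doubling, shrink):
    """Sum of the infinite geometric series: every doubling fits inside this."""
    return first_doubling / shrink


if __name__ == "__main__":
    _, exp_cum = doubling_schedule(4.5, 0.0, 10)
    _, sup_cum = doubling_schedule(4.5, 0.10, 10)
    print(f"10 doublings, exponential:      {exp_cum[-1]:.1f} months")
    print(f"10 doublings, superexponential: {sup_cum[-1]:.1f} months")
    print(f"all doublings, superexponential: {time_limit(4.5, 0.10):.1f} months")
```

Because the doubling times form a geometric series, the total is bounded. Under these assumed numbers, infinitely many doublings fit inside 45 months, the same time the plain exponential needs for just ten.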

Just like before, the initial time horizon H0 parameter is not subject to uncertainty analysis. What’s much more crazy here is that the rate of doubling growth, which we’ll call alpha, wasn’t subject to uncertainty either! (Note that this has been updated in Eli’s newest version). As we’ll see, the value of this alpha parameter is one of the most impactful parameters in the whole model, so it’s crazy that they didn’t model any uncertainty on it, and just pick a seemingly arbitrary value of 10% without explaining why they did so.

The central criticism here seems to be that there isn’t enough uncertainty, that essentially all the parameters here should be uncertain. I think that’s correct. I think it’s also a correct general critique of most timeline predictions, that people are acting far more certain than they should be. Note that this goes both ways - it makes it more likely things could be a lot slower, but also they could be faster.

What the AI 2027 forecast is doing is using the combination of different curve types to embody the uncertainty in general, rather than also trying to fully incorporate uncertainty in all individual parameters.

I also agree that this experiment shows something was wrong, and a great way to fix a model is to play with it until it produces a stupid result in some hypothetical world, then figure out why that happened:

Very obviously, having to go through a bunch more doublings should matter more than this. You wouldn’t put p(SC in 2025) at 5.8% if we were currently at fifteen nanoseconds. Changing the initial conditions a lot seems to break the model.
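A rough sketch of why the starting point barely moves a superexponential forecast: with shrinking doubling times, the extra doublings needed from a tiny H0 add very little time. The ten-doubling baseline, the 4.5 month first doubling, and the closed-form shortcut for fractional doublings are all assumptions for illustration, not the model’s actual code.

```python
import math


def extra_doublings(h0_small, h0_large):
    """Doublings needed to grow from h0_small to h0_large (same units)."""
    return math.log2(h0_large / h0_small)


def total_months(doublings, first_doubling, shrink):
    """Time for `doublings` doublings when each is `shrink` shorter than the last."""
    if shrink == 0.0:
        return doublings * first_doubling
    ratio = 1.0 - shrink
    # Closed form of the geometric sum, extended to fractional doublings.
    return first_doubling * (1.0 - ratio ** doublings) / (1.0 - ratio)


if __name__ == "__main__":
    baseline = 10  # hypothetical doublings remaining from a 15 minute horizon
    extra = extra_doublings(15e-9, 15 * 60)  # 15 ns vs 15 min, both in seconds
    for label, shrink in [("exponential", 0.0), ("superexponential", 0.10)]:
        near = total_months(baseline, 4.5, shrink)
        far = total_months(baseline + extra, 4.5, shrink)
        print(f"{label}: {near:.1f} months from 15 min, {far:.1f} from 15 ns")
```

In this toy version the roughly 36 extra doublings cost the plain exponential more than a decade, while the superexponential curve absorbs them in a bit over a year, which is the kind of insensitivity the experiment exposed.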

If you think about why the model sets up the way it does, you can see why it breaks. The hypothesis is that as AI improves, it gains the ability to accelerate further AI R&D progress, and that this may be starting to happen, or things might otherwise still go superexponential.

Those probabilities are supposed to be forward looking from this point, whereas we know they won’t happen until this point. It’s not obvious when we should have had this effect kick in if we were modeling this ‘in the past’ without knowing what we know now, but it obviously shouldn’t kick in before several minute tasks (as in, before the recent potential trend line changes) because the human has to be in the loop and you don’t save much time.

Thus, yes, the model breaks if you start it before that point, and ideally you would force the super exponential effects to not kick in until H is at least minutes long (with some sort of gradual phase in, presumably). Given that we were using a fixed H0, this wasn’t relevant, but if you wanted to use the model on situations with lower H0s you would have to fix that.
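One way the suggested fix could look, as a sketch only: scale the ‘10% shorter’ effect by a weight that is zero for very short horizons and phases in gradually. The 1 minute and 10 minute thresholds, the smoothstep shape, and all names here are hypothetical choices of mine, not anything in the AI 2027 code.

```python
import math


def phase_in(h_minutes, start=1.0, full=10.0):
    """Weight in [0, 1] for the superexponential effect at horizon h_minutes.

    0 below `start`, 1 above `full`, smoothstep in log-horizon in between.
    Both thresholds are hypothetical choices for this sketch.
    """
    if h_minutes <= start:
        return 0.0
    if h_minutes >= full:
        return 1.0
    x = math.log(h_minutes / start) / math.log(full / start)
    return x * x * (3.0 - 2.0 * x)


def months_to_target(h0_minutes, target_minutes, first_doubling,
                     shrink=0.10, gated=True):
    """Step through doublings, shrinking doubling times only once phased in."""
    horizon, elapsed, doubling = h0_minutes, 0.0, first_doubling
    while horizon < target_minutes:
        elapsed += doubling
        horizon *= 2.0
        weight = phase_in(horizon) if gated else 1.0
        doubling *= 1.0 - shrink * weight
    return elapsed
```

With a starting horizon of 15 minutes the gate is already fully open, so gated and ungated runs agree. With a starting horizon of 15 nanoseconds, the gated run pays full price for every doubling below a minute, so the absurdly early starting point now pushes the date back substantially.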

How much uncertainty do we have about current H0, at this point? I think it’s reasonable to argue something on the order of a few minutes is on the table if you hold high standards for what that means, but I think 15 seconds is very clearly off the table purely on the eyeball test.

Similarly, there is the argument that these equations start giving you crazy numbers if you extend them past some point. And I’d say, well, yeah, if you hit a singularity then your model outputting Obvious Nonsense is an acceptable failure mode. Fitting, even.

The next section asks for why we are using both super exponential curves in general, and this ‘super exponential’ curve in particular.

So, what arguments do they provide for superexponentiality? Let’s take a look, in no particular order:

Argument 1: public vs internal:

“The trend would likely further tilt toward superexponentiality if we took into account that the public vs. internal gap has seemed to decrease over time.”

…

But even if we do accept this argument, this effect points to a slower growth rate, not a faster one.

I do think we should accept this argument, and also Titotal is correct on this one. The new curve suggests modestly slower progress.

The counterargument is that we used to be slowed down by this wait between models, in two ways.

Others couldn’t know about, see, access, distill or otherwise follow your model while it wasn’t released, which previously slowed down progress.

No one could use the model to directly accelerate progress during the wait.

The counterargument to the counterargument is that until recently direct acceleration via using the model wasn’t a thing, so that effect shouldn’t matter, and mostly the trendline is OpenAI models so that effect shouldn’t matter much either.

I can see effects in both directions, but overall I do think within this particular context the slower direction arguments are stronger. We only get to accelerate via recklessly releasing new models once, and we’ve used that up now.

Slightly off topic, but it is worth noting that in AI 2027, this gap opens up again. The top lab knows that its top model accelerates AI R&D, so it does not release an up-to-date version not for safety but to race ahead of the competition, and to direct more compute towards further R&D.

This argument is that time doublings get easier. Going from being able to consistently string together an hour to a week is claimed to be a larger conceptual gap than a week to a year.

Titotal is skeptical of this for both AIs and humans, especially because we have a lot of short term tutorials and few long term ones.

I would say that learning how to do fixed short term tasks, where you follow directions, is indeed far easier than general ‘do tasks that are assigned’ but once you are past that phase I don’t think the counterargument does much.

I agree with the generic ‘more research is needed’ style call here. Basically everywhere, more research is needed and better understanding would be good. Until then, it is better to go with what you have than to throw up your hands and say variations on ‘no evidence.’ Of course, one is free to disagree with the magnitudes chosen.

In humans, I think the difficulty gap is clearly real if you were able to hold yourself intact, once you are past the ‘learn the basic components’ stage. You can see it in the extremes. If you can sustain an effort reliably for a year, you’ve solved most of the inherent difficulties of sustaining it for ten.

The main reason ten is harder (and a hundred is much, much harder!) is that life gets in the way, you age and change, and this alters your priorities and capabilities. At some point you’re handing off to successors. There are a lot of tasks where humans would essentially get to infinite task length if the human were an em that didn’t age.

With AIs in this context, aging and related concepts are not an issue. If you can sustain a year, why couldn’t you sustain two? The answer presumably is ‘compounding error rates’ plus longer planning horizons, but if you can use system designs that recover from failures, that solves itself, and if you get non-recoverable error rates either down to zero or get them to correlate enough, you’re done.
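A toy version of the ‘compounding error rates’ point, under the strong assumption that a task is a chain of independent steps, each with the same chance of an unrecoverable failure. Nothing here comes from the AI 2027 model, and the function names are mine. It only shows why each doubling of task length demands roughly halving that failure rate, and why driving the rate to zero makes length stop mattering.

```python
def success_probability(unrecoverable_rate, steps):
    """Chance of finishing `steps` independent steps with no unrecoverable failure."""
    return (1.0 - unrecoverable_rate) ** steps


def tolerable_rate(steps, target_success=0.8):
    """Largest per-step unrecoverable failure rate that still hits target_success."""
    return 1.0 - target_success ** (1.0 / steps)


if __name__ == "__main__":
    for steps in (1_000, 2_000, 4_000):
        print(f"{steps} steps: tolerable rate {tolerable_rate(steps):.6f}")
    # With zero unrecoverable failures, task length is irrelevant.
    print(success_probability(0.0, 10**9))
```

The 80% target mirrors the 80% time horizon used earlier. Recoverable errors do not appear in this sketch at all, which is the point: only the failures you cannot recover from compound.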

A recent speedup is quite weak evidence for this specific type of super exponential curve. As I will show later, you can come up with lots of different superexponential equations, you have to argue for your specific one.

That leaves the “scaling up agency training”. The METR report does say that this might be a cause for the recent speedup, but it doesn’t say anything about “scaling up agency training” being a superexponential factor. If agency training only started recently, could instead be evidence that the recent advances have just bumped us into a faster exponential regime.

Or, as the METR report notes, it could just be a blip as a result of recent advances: “But 2024–2025 agency training could also be a one-time boost from picking low-hanging fruit, in which case horizon growth will slow once these gains are exhausted”.

This seems like an argument that strictly exponential curves should have a very strong prior? So you need to argue hard if you want to claim more than that?

The argument that ‘agency training’ has led to a faster doubling curve seems strong. Of course we can’t ‘prove’ it, but the point of forecasting is to figure out our best projections and models in practice, not to pass some sort of theoretical robustness check, or to show strongly why things must be this exact curve.

Is it possible that this has ‘only’ kicked us into a new faster exponential? Absolutely, but that possibility is explicitly part of AI 2027’s model, and indeed earlier Titotal was arguing that we shouldn’t think that the exponential was likely to have even permanently altered, and they’re now here admitting that the mechanisms involved make this shift likely to be real.

I mention the ‘one time blip’ possibility above, as well, but it seems to me highly implausible that if it is a ‘blip’ that we are close to done with this. There is obviously quite a lot of unhobbling left to do related to agency.

Should superhuman AGIs have infinite time horizons? AI 2027 doesn’t fully endorse their argument on this, but I think it is rather obvious that at some point doublings are essentially free.

Titotal responds to say that an AI that could do extremely long time horizon CS tasks would be a superintelligence, to which I would tap the sign that says we are explicitly considering what would be true about a superintelligence. That’s the modeling task.

The other argument here, that given a Graham’s number of years (and presumably immortality of some kind, as discussed earlier) a human can accomplish quite an awful lot, well, yes, even if you force them not to do the obviously correct path of first constructing a superintelligence to do it for them. But I do think there’s an actual limit here if the human has to do all the verification too, an infinite number of monkeys on typewriters can write Shakespeare but they can’t figure out where they put it afterwards, and their fastest solution to this is essentially to evolve into humans.

Alternatively, all we’re saying is ‘the AI can complete arbitrary tasks so long as they are physically possible’ and at that point it doesn’t matter if humans can do them too, the metric is obviously not mapping to Reality in a useful way and the point is made.

Now if you read the justifications in the section above, you might be a little confused as to why they didn’t raise the most obvious justification for superexponentiality: the justification that as AI gets better, people will be able to use the AI for r&d research, thus leading to a feedback loop of faster AI development.

The reason for this that they explicitly assume this is true and apply it to every model, including the “exponential” and “subexponential” ones. The “exponential” model is, in fact, also superexponential in their model.

(Note: in Eli’s newest model this is substantially more complicated, I will touch on this later)

Titotal walks us through the calculation, which is essentially a smooth curve that speeds up progress based on feedback loops proportional to progress made towards a fully superhuman coder, implemented in a way to make it easily calculable and so it doesn’t go haywire on parameter changes.

Titotal’s first objection is that this projection implies (if you run the calculation backwards) AI algorithmic progress is currently 66% faster than it was in 2022, whereas Nikola (one of the forecasters) estimates current algorithmic progress is only 3%-30% faster, and the attempt to hardcode a different answer in doesn’t work, because relative speeds are what matters and they tried to change absolute speeds instead. That seems technically correct.

The question is, how much does this mismatch ultimately matter? It is certainly possible for the speedup factor from 2022 to 2025 to be 10% (1 → 1.1) and for progress to then accelerate far faster going forward as AI crosses into more universally useful territory.

As in, if you have an agent or virtual employee, it needs to cross some threshold to be useful at all, but after that it rapidly gets a lot more useful. But that’s not the way the model works here, so it needs to be reworked, and also yes I think we should be more skeptical about the amount of algorithmic progress speedup we can get in the transitional stages here, about the amount of progress required to get to SC, or both.

After walking through the curves in detail, this summarizes the objection to the lack of good fit for the past parts of the curve:

I assume the real data would mostly be within the 80% CI of these curves, but I don’t think the actual data should be an edge case of your model.

So, to finish off the “superexponential” the particular curve in their model does not match empirically with data, and as I argued earlier, it has very little conceptual justification either. I do not see the justification for assigning this curve 40% of the probability space.

I don’t think 75th percentile is an ‘edge case’ but I do agree that it is suspicious.

I think that the ‘super exponential’ curves are describing a future phenomenon, for reasons that everyone involved understands, that one would not expect to match backwards in time unless you went to the effort of designing equations to do that, which doesn’t seem worthwhile here.

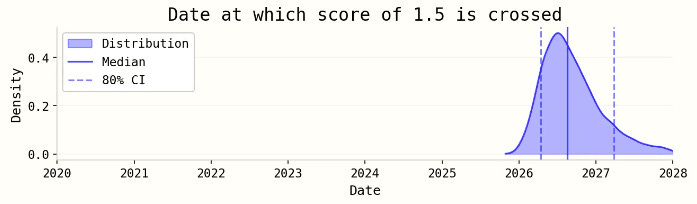

This is the graph in question, the issues with it are in the process of being addressed.

I agree that various aspects of this graph and how it was presented weren’t great, especially using a 15% easier-each-time doubling curve rather than the 10% that AI 2027 actually uses, and calling it ‘our projection.’ I do think it mostly serves the purpose of giving a rough idea what is being discussed, but more precision would have been better, and I am glad this is being fixed.

This objection is largely that there are only 11 data points (there are now a few more) on the METR curve, and you can fit it with curves that look essentially the same now but give radically different future outcomes. And yes, I agree, that is kind of the point, and if anything we are underrepresenting the uncertainty here, we can agree that even if we commit to using fully simplified and fully best-fit-to-the-past models we get a range of outcomes that prominently include 2028-2029 SCs.

I do think it is reasonable to say that the super exponential curve the way AI 2027 set it up has more free variables than you would like when fitting 11 data points, if that’s all you were looking to do, but a lot of these parameters are far from free and are not being chosen in order to fit the past curve data.

We now move on to the second more complex model, which Titotal says in many ways is worse, because if you use a complicated model you have to justify the complications, and it doesn’t.

I think a better way to describe the 2nd model is, it predicts a transition in rate of progress around capabilities similar to saturation of re-bench, after which things will move at a faster pace, and uses the re-bench point as a practical way of simulating this.

Method 2 starts by predicting how long it would take to achieve a particular score (referred to as “saturation”) on Re-bench, a benchmark of AI skill on a group of ML research engineering tasks, also prepared by METR. After that, the time horizon extension model is used as with method 1, except that it starts later (when Re-bench saturates), and that it stops earlier (when a certain convoluted threshold is reached).

After that stopping point, 5 new gaps are estimated, which are just constants (as always, sampled from lognormal), and then the whole thing is run through an intermediate speedup model. So any critiques of model 1 will also apply to model 2, there will just be some dilution with all the constant gap estimates and the “re-bench” section.
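For readers who want to see what ‘sampled from lognormal’ can mean in practice, here is one common way to turn an 80% confidence interval into a lognormal and draw from it. This is a sketch under the assumption that the interval’s ends are the 10th and 90th percentiles. The actual AI 2027 code may parameterize things differently, and the function names are mine.

```python
import math
import random
from statistics import NormalDist

Z90 = NormalDist().inv_cdf(0.90)  # standard normal 90th percentile, about 1.2816


def lognormal_from_ci80(low, high):
    """Return (mu, sigma) of a lognormal whose 10th/90th percentiles are low/high."""
    if not 0 < low < high:
        raise ValueError("need 0 < low < high")
    mu = (math.log(low) + math.log(high)) / 2.0
    sigma = (math.log(high) - math.log(low)) / (2.0 * Z90)
    return mu, sigma


def sample_gap(low, high, rng):
    """Draw one value (e.g. a gap length in months) from that lognormal."""
    mu, sigma = lognormal_from_ci80(low, high)
    return rng.lognormvariate(mu, sigma)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical gap with an 80% CI of 1 to 10 months.
    draws = [sample_gap(1.0, 10.0, rng) for _ in range(10_000)]
    inside = sum(1.0 <= d <= 10.0 for d in draws) / len(draws)
    print(f"share of draws inside the stated interval: {inside:.2f}")
```

The median of such a distribution is the geometric mean of the two endpoints, so a wide interval puts the median much closer to the low end than an arithmetic midpoint would.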

The reason to start later is obvious, you can’t start actually using AI skill for ML research tasks until it can beat not using it. So what you actually have is a kind of ‘shadow curve’ that starts out super negative - if you tried to use AI to do your ML tasks in 2017 you’d very obviously do way worse than doing it yourself. Then at some point in the 2020s you cross that threshold.

We also need a top of the curve, because this is a benchmark and by its nature it saturates even if the underlying skills don’t. In some senses the top of the S-curve is artificial, in some it isn’t.

Titotal points out that you can’t meaningfully best-fit an S-curve until you know you’ve already hit the top, because you won’t know where the top is. The claim is that we have no idea where the benchmark saturates, that projecting it to be 2 is arbitrary. To which I’d say, I mean, okay, weird but if true who cares? If the maximum is 3 and we approach that a bit after we hit 2, then that’s a truth about the benchmark not about Reality, and nothing important changes. As I then realized, Titotal noticed too that as long as you’re above human performance it doesn’t change things substantially, so why are we having this conversation?
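If you want to check this kind of sensitivity yourself, here is a small sketch. It pins a logistic curve through two observed scores for a given assumed ceiling, then asks when the curve crosses a fixed threshold. The data points and the 1.5 threshold are invented for illustration, and pinning through two points is a stand-in for a real fit, not what the appendix does.

```python
import math


def logit_fraction(score, ceiling):
    """Log-odds of score / ceiling; requires 0 < score < ceiling."""
    return math.log(score / (ceiling - score))


def fit_through_two_points(p1, p2, ceiling):
    """Logistic s(t) = ceiling / (1 + exp(-k (t - t0))) through two (t, score) points."""
    (t1, s1), (t2, s2) = p1, p2
    k = (logit_fraction(s2, ceiling) - logit_fraction(s1, ceiling)) / (t2 - t1)
    t0 = t1 - logit_fraction(s1, ceiling) / k
    return k, t0


def crossing_time(threshold, k, t0, ceiling):
    """Time at which the fitted curve reaches `threshold` (must be below ceiling)."""
    return t0 + logit_fraction(threshold, ceiling) / k


if __name__ == "__main__":
    observed = ((2024.0, 0.3), (2025.0, 0.8))  # made-up scores
    for ceiling in (2.0, 3.0):
        k, t0 = fit_through_two_points(*observed, ceiling)
        print(ceiling, round(crossing_time(1.5, k, t0, ceiling), 2))
```

With these made-up inputs, moving the ceiling from 2 to 3 shifts the threshold crossing by about a quarter of a year. Real data could behave differently, but the check itself is cheap to run.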

This is a general pattern here. It’s virtuous to nitpick, but you should know when you’re nitpicking and when you’re not.

When you’re doing forecasting or modeling, you have to justify your decisions if and only if those decisions matter to the outcome. If it does not matter, it does not matter.

Speaking of doesn’t matter, oh boy does it not matter?

Step 2 is to throw this calculation in the trash.

I’m serious here. Look at the code. The variable t_sat_ci, the “CI for date when capability saturates”, is set by the forecaster, not calculated. There is no function related to the RE-bench data at all in the code. Feel free to look! It’s not in the updated code either.

…

Eli gives an 80% CI of saturation between september 2025 to january 2031, and Nikola gives an 80% CI of saturation between august 2025 and november 2026. Neither of these are the same as the 80% CI in the first of the two graphs, which is early 2026 to early 2027. Both distributions peak like half a year earlier than the actual Re-bench calculation, although Eli’s median value is substantially later.

Eli has told me that the final estimates for saturation time are “informed” by the logistic curve fitting, but if you look above they are very different estimates.

Those are indeed three very different curves. It seems that the calculation above is an intuition pump or baseline, and they instead go with the forecasters’ predictions, with Nikola expecting it to happen faster than the projection, and Eli having more uncertainty. I do think Nikola’s projection here seems unreasonably fast and I’d be surprised if he hasn’t updated by now?

Eli admits the website should have made the situation clear and he will fix it.

Titotal says we’ve ‘thrown out’ the re-bench part of the appendix. I say no, that’s not how this works, yes we’re not directly doing math with the output of the model above, but we are still projecting the re-bench results and using that to inform the broader model. That should have been made clear, and I am skeptical of Eli and Nikola’s graphs on this, especially the rapid sudden peak in Nikola’s, but the technique used is a thing you sometimes will want to do.

So basically we now do the same thing we did before except a lot starts in the future.

Titotal: Okay, so we’ve just thrown out the re-bench part of the appendix. What happens next? Well, next, we do another time horizons calculation, using basically the same methodology as in method 1. Except we are starting later now, so:

They guess the year that we hit re-bench saturation.

They guess the time horizon at the point we hit re-bench saturation.

They guess the doubling time at the point when we hit re-bench saturation.

They guess the velocity of R&D speedup at the point when we hit re-bench saturation.

Then, they use these parameters to do the time horizons calculation from part 1, with a lower cut-off threshold I will discuss in a minute.

And they don’t have a good basis for these guesses, either. I can see how saturating RE-bench could give you some information about the time horizon, but not things like the doubling time, which is one of the most crucial parameters that is inextricably tied to long term trends.

Setting aside the cutoff, yes, this is obviously how you would do it. Before, we estimated those variables as of now. If you start in the future, you want to know what they look like as you reach the pivot point.
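The steps above can be sketched as a small calculation: given a guessed time horizon and doubling time at the pivot point, how long until a target horizon is reached? This is a hedged toy version, not the authors’ implementation. The function name `months_to_reach`, the `shrink` parameter (each doubling taking a fixed fraction less time than the previous one, as one simple form of superexponential growth), and all the example numbers except the 3 month doubling time are my assumptions. It also ignores the R&D speedup parameter entirely.

```python
import math


def months_to_reach(start_horizon, target_horizon, doubling_months, shrink=0.0):
    """Months for the time horizon to grow from start_horizon to target_horizon.

    shrink = 0.0 gives a plain exponential (every doubling takes doubling_months).
    shrink > 0.0 makes each successive doubling take (1 - shrink) times as long
    as the one before it, extended smoothly to fractional doublings.
    """
    if start_horizon <= 0 or target_horizon <= 0 or doubling_months <= 0:
        raise ValueError("horizons and doubling time must be positive")
    if not (0.0 <= shrink < 1.0):
        raise ValueError("shrink must be in [0, 1)")
    if target_horizon <= start_horizon:
        return 0.0
    doublings = math.log2(target_horizon / start_horizon)
    if shrink == 0.0:
        return doublings * doubling_months
    ratio = 1.0 - shrink
    # Geometric series: T * (1 + r + r^2 + ...) over `doublings` terms
    return doubling_months * (1.0 - ratio ** doublings) / (1.0 - ratio)


# ASSUMPTION: a 1 hour horizon at the pivot and a 1024 hour target are placeholders.
# The 3 month doubling time is the median quoted in the discussion that follows.
plain = months_to_reach(1, 1024, doubling_months=3.0)
faster = months_to_reach(1, 1024, doubling_months=3.0, shrink=0.1)
print(f"exponential: {plain:.1f} months, superexponential: {faster:.1f} months")
```

The point of the sketch is how much the answer depends on the guessed doubling time and on whether any shrink is applied at all, which is why it matters where those guesses come from.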

Presumably you would solve this by running your model forward in the previous period, the same way you did in the first case? Except that this is correlated with the pace of re-bench progress, so that doesn’t work on its own. My guess is you would want to assign some percent weight to the date and some percent to what it would look like on your median pivot date.

And the estimation of doubling time is weird. The median estimate for doubling time at re-bench saturation is around 3 months, which is 33% lower than their current estimate for doubling time. Why do they lower it?

Well, partly because under the superexponential model there would have been speedups during the re-bench saturation period.

Titotal then repeats the concern about everything being superexponential, but I don’t see the issue on this one, although I would do a different calculation to decide on my expectation here.

I also don’t understand the ‘this simulation predicts AI progress to freeze in place for two years’ comment, as in I can’t parse why one would say that there.

And now here’s where we come to a place where I actually am more concerned than Titotal is:

The other main difference is that this time horizons model only goes to a lower threshold, corresponding to when AI hits the following requirement:

“Ability to develop a wide variety of software projects involved in the AI R&D process which involve modifying a maximum of 10,000 lines of code across files totaling up to 20,000 lines. Clear instructions, unit tests, and other forms of ground-truth feedback are provided. Do this for tasks that take humans about 1 month (as controlled by the “initial time horizon” parameter) with 80% reliability, at the same cost and speed as humans.”

Despite differing by 2 orders of magnitude on the time horizon required for SC in the first method, when it comes to meeting this benchmark they are both in exact agreement for this threshold, which they both put as a median of half a month.

This is weird to me, but I won’t dwell on it.

I kind of want to dwell on this, and how they are selecting the first set of thresholds, somewhat more, since it seems rather important. I want to understand how these various disagreements interplay, and how they make sense together.

That’s central to how I look at things like this. You find something suspicious that looks like it won’t add up right. You challenge. They address it. Repeat.

I think I basically agree with the core criticism here that this consists of guessing things about future technologies in a way that seems hard to get usefully right, it really is mostly a bunch of guessing, and it’s not clear that this complexity is helping the model be better than making a more generalized guess, perhaps using this as an intuition pump. I’m not sure. I don’t think this is causing a major disagreement in the mainline results, though?

In addition to updating the model, Eli responds with this comment.

I don’t understand the perspective that this is a ‘bad response.’ It seems like exactly how all of this should work, they are fixing mistakes and addressing communication issues, responding to the rest, and even unprompted offer a $500 bounty payment.

Eli starts off linking to the update to the model from May 7.

Here is Eli’s response on the ‘most important disagreements’:

Whether to estimate and model dynamics for which we don't have empirical data. e.g. titotal says there is "very little empirical validation of the model," and especially criticizes the modeling of superexponentiality as having no empirical backing. We agree that it would be great to have more empirical validation of more of the model components, but unfortunately that's not feasible at the moment while incorporating all of the highly relevant factors.[1]

Whether to adjust our estimates based on factors outside the data. For example, titotal criticizes us for making judgmental forecasts for the date of RE-Bench saturation, rather than plugging in the logistic fit. I’m strongly in favor of allowing intuitive adjustments on top of quantitative modeling when estimating parameters.

[Unsure about level of disagreement] The value of a "least bad" timelines model. While the model is certainly imperfect due to limited time and the inherent difficulties around forecasting AGI timelines, we still think overall it’s the “least bad” timelines model out there and it’s the model that features most prominently in my overall timelines views. I think titotal disagrees, though I’m not sure which one they consider least bad (perhaps METR’s simpler one in their time horizon paper?). But even if titotal agreed that ours was “least bad,” my sense is that they might still be much more negative on it than us. Some reasons I’m excited about publishing a least bad model:

Reasoning transparency. We wanted to justify the timelines in AI 2027, given limited time. We think it’s valuable to be transparent about where our estimates come from even if the modeling is flawed in significant ways. Additionally, it allows others like titotal to critique it.

Advancing the state of the art. Even if a model is flawed, it seems best to publish to inform others’ opinions and to allow others to build on top of it.

My read, as above, is that titotal indeed objects to a ‘least bad’ model if it is presented in a way that doesn’t have ‘bad’ stamped all over it with a warning not to use it for anything. I am strongly with Eli here. I am also with Thane that being ‘least bad’ is not on its own enough, reality does not grade on a curve and you have to hit a minimum quality threshold to be useful, but I do think they hit that.

As discussed earlier, I think #1 is also an entirely fair response, although there are other issues to dig into on those estimates and where they come from.

The likelihood of time horizon growth being superexponential, before accounting for AI R&D automation. See this section for our arguments in favor of superexponentiality being plausible, and titotal’s responses (I put it at 45% in our original model). This comment thread has further discussion. If you are very confident in no inherent superexponentiality, superhuman coders by end of 2027 become significantly less likely, though are still >10% if you agree with the rest of our modeling choices (see here for a side-by-side graph generated from my latest model).

How strongly superexponential the progress would be. This section argues that our choice of superexponential function is arbitrary. While we agree that the choice is fairly arbitrary and ideally we would have uncertainty over the best function, my intuition is that titotal’s proposed alternative curve feels less plausible than the one we use in the report, conditional on some level of superexponentiality.

Whether the argument for superexponentiality is stronger at higher time horizons. titotal is confused about why there would sometimes be a delayed superexponential rather than starting at the simulation starting point. The reasoning here is that the conceptual argument for superexponentiality is much stronger at higher time horizons (e.g. going from 100 to 1,000 years feels likely much easier than going from 1 to 10 days, while it’s less clear for 1 to 10 weeks vs. 1 to 10 days). It’s unclear that the delayed superexponential is the exact right way to model that, but it’s what I came up with for now.

I don’t think 3b here is a great explanation, as I initially misunderstood it, but Eli has clarified that its intent matches my earlier statements about ease of shifting to longer tasks being clearly easier at some point past the ‘learn the basic components’ stage. Also I worry this does drop out a bunch of the true objections, especially the pointing towards multiple different sources of superexponentiality (we have both automation of AI R&D and a potential future drop in the difficulty curve of tasks), which he lists under ‘other disagreements’ and says he hasn’t looked into yet - I think that’s probably the top priority to look at here at this point. I find the ‘you have to choose a curve and this seemed like the most reasonable one’ response to be, while obviously not the ideal world state, in context highly reasonable.
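To see what a ‘delayed superexponential’ does mechanically, here is a toy simulation. On a plain exponential, going from 1 to 10 days and going from 100 to 1,000 years each take the same number of doublings (about 3.3), so they take the same time. The delayed version keeps that behavior until the horizon passes some onset level, and only then lets each doubling get faster. This is a sketch of the idea, not Eli’s code: the function name `months_with_delayed_onset`, the `onset_horizon` and `shrink` parameters, and every number below are assumptions, and it counts whole doublings only.

```python
import math


def months_with_delayed_onset(start, target, doubling_months, shrink, onset_horizon):
    """Months to reach `target`, counting whole doublings from `start`.

    Each doubling takes `doubling_months` until the horizon reaches `onset_horizon`.
    From then on, every later doubling takes (1 - shrink) times as long as the last.
    """
    if start <= 0 or target <= 0 or doubling_months <= 0:
        raise ValueError("horizons and doubling time must be positive")
    if not (0.0 <= shrink < 1.0):
        raise ValueError("shrink must be in [0, 1)")
    horizon = float(start)
    step = float(doubling_months)
    elapsed = 0.0
    while horizon < target:
        elapsed += step   # pay for this doubling at the current pace
        horizon *= 2.0
        if horizon >= onset_horizon:
            step *= 1.0 - shrink  # later doublings get cheaper
    return elapsed


# ASSUMPTION: placeholder horizons in hours, 3 month doublings, 10% shrink.
never = months_with_delayed_onset(1, 1024, 3.0, 0.1, onset_horizon=math.inf)
delayed = months_with_delayed_onset(1, 1024, 3.0, 0.1, onset_horizon=32)
immediate = months_with_delayed_onset(1, 1024, 3.0, 0.1, onset_horizon=1)
print(f"never: {never:.1f}, delayed: {delayed:.1f}, immediate: {immediate:.1f} months")
```

The delayed case always lands between the other two, so the choice of onset point is a real modeling decision and not a detail, which is part of why the choice of curve draws so much scrutiny.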

He then notes two other disagreements and acknowledges three mistakes.

Eli released an update in response to a draft of the Titotal critiques.

The new estimates are generally a year or two later, which mostly matches the updates I’d previously seen from Daniel Kokotajlo. This seems like a mix of model tweaks and adjusting for somewhat disappointing model releases over the last few months.

Overall Titotal is withholding judgment until Eli writes up more about it, which seems great, and also offers initial thoughts. Mostly he sees a few improvements but doesn’t believe his core objections are addressed.

Titotal challenges the move from 40% chance of superexponential curves to a 90% chance of an eventual such curve, although Eli notes that the 90% includes a lot of probability put into very large time horizon levels and thus doesn’t impact the answer that much. I see why one would generally be concerned about double counting, but I believe that I understand this better now and they are not double counting.

Titotal wraps up by showing you could draw a lot of very distinct graphs that ‘fit the data’ where ‘the data’ is METR’s results. And yes, of course, we know this, but that’s not the point of the exercise. No, reality doesn’t ‘follow neat curves’ all that often, but AI progress remarkably often has so far, and also we are trying to create approximations and we are all incorporating a lot more than the METR data points.

If you want to look at Titotal’s summary of why bad thing is bad, it’s at this link. I’ve already addressed each of these bullet points in detail. Some I consider to point to real issues, some not so much.

What is my overall take on the right modeling choices?

Simplicity is highly valuable. As the saying goes, make everything as simple as possible, but no simpler. There’s a lot to be said for mostly relying on something that has the shape of the first model, with the caveat of more uncertainty in various places, and that the ‘superexponential’ effects have an uncertain magnitude and onset point. There are a few different ways you could represent this. If I was doing this kind of modeling I’d put a lot more thought into the details than I have had the chance to do.

I would probably drop the detailed considerations of future bottlenecks and steps from the ultimate calculation, using them more as an intuition pump, the same way they currently calculate re-bench times and then put the calculation in the trash (see: plans are worthless, planning is essential.)

If I was going to do a deep dive, I would worry about whether we are right to combine these different arguments for superexponential progress, as in both AI R&D feedback loops and ease of future improvements, and whether either or both of them should be incorporated into the preset trend line or whether they have other issues.

The final output is then of course only one part of your full model of Reality.

At core, I buy the important concepts as the important concepts. As in, if I was using my own words for all this:

AI progress continues, although a bit slower than we would have expected six months ago - progress since then has made a big practical difference, it’s kind of hard to imagine going back to models of even six months ago, but proper calibration means that can still be disappointing.

In addition to scaling compute and data, AI itself is starting to accelerate the pace at which we can make algorithmic progress in AI. Right now that effect is real but modest, but we’re crossing critical thresholds where it starts to make a big difference, and this effect probably shouldn’t be considered part of the previous exponentials.

The benefit of assigning tasks to AI starts to take off when you can reliably assign tasks to the AI without needing continuous human supervision, and now can treat those tasks as atomic actions not requiring state.

If AI can take humans out of the effective loops in this research and work for more extended periods, watch the hell out (on many levels, but certainly in terms of capabilities and algorithmic progress.)

Past a certain point where you can reliably do what one might call in-context atomic components, gaining the robustness and covering the gaps necessary to do this more reliably starts to get easier rather than harder, relative to the standard exponential curves.

This could easily ‘go all the way’ to SC (and then quickly to full ASI) although we don’t know that it does. This is another uncertainty point, also note that AI 2027 as written very much involves waiting for various physical development steps.

Thus, without making any claims about what the pace of all this is (and my guess is it is slower than they think it is, and also highly uncertain), the Baseline Scenario very much looks like AI 2027, but there’s a lot of probability mass also on other scenarios.

One then has to ask what happens after you get this ‘superhuman coder’ or otherwise get ASI-like things of various types.

Which all adds up to me saying that I agree with Eli that none of the criticisms raised here challenges, to me, the ultimate or fundamental findings, only the price. The price is of course what we are here to talk about, so that is highly valuable even within relatively narrow bands (2028 is very different from 2029 because of reasons, and 2035 is rather different from that, and so on).

I realize that none of this is the kind of precision that lets you land on the moon.

The explanation for all this is right there: This is a physicist, holding forecasting of AI timelines to the standards of physics models. Well, yeah, you’re not going to be happy. If you try to use this to land on the moon, you will almost certainly miss the moon, the same way that if you try to use current alignment techniques on a superintelligence, you will almost certainly miss and then you will die.

One of the AI 2027 authors joked to me in the comments on a recent article that “you may not like it but it's what peak AI forecasting performance looks like”.

Well, I don’t like it, and if this truly is “peak forecasting”, then perhaps forecasting should not be taken very seriously.

Maybe this is because I am a physicist, not a Rationalist. In my world, you generally want models to have strong conceptual justifications or empirical validation with existing data before you go making decisions based off their predictions: this fails at both.

Yes, in the world of physics, things work very differently, and we have much more accurate and better models. If you want physics-level accuracy in your predictions of anything that involves interactions of humans, well, sorry, tough luck. And presumably everyone agrees that you can’t have a physics-quality model here and that no one is claiming to have one? So what’s the issue?

The issue is whether basing decisions on modeling attempts like this is better than basing them on ‘I made it up’ or not having probabilities and projections at all and vibing the damn thing.

What I’m most against is people taking shoddy toy models seriously and basing life decisions on them, as I have seen happen for AI 2027.

…

I am not going to propose an alternate model. If I tried to read the tea leaves of the AI future, it would probably also be very shaky. There are a few things I am confident of, such as a software-only singularity not working and that there will be no diamondoid bacteria anytime soon. But these beliefs are hard to turn into precise yearly forecasts, and I think doing so will only cement overconfidence and leave people blindsided when reality turns out even weirder than you imagined.

Why is this person confident the software-only singularity won’t work? This post does not say. You’d have to read their Substack; I assume it’s there.

The forecast here is ‘precise’ in the sense that it has a median, and we have informed people of that median. It is not ‘precise’ in the sense of putting a lot of probability mass on that particular median, even as an entire year, or even in the sense that the estimate wouldn’t change with more work or better data. It is precise in the sense that, yes, Bayes Rule is a thing, and you have to have a probability distribution, and it’s a lot more useful to share it than not share it.

I do find that the AI 2027 arguments updated me modestly towards a faster distribution of potential outcomes. I find 2027 to be a totally plausible time for SC to happen, although my median would be substantially longer.

You can’t ‘not base life decisions’ on information until it crosses some (higher than this) robustness threshold. Or I mean you can, but it will not go great.

In conclusion, I once again thank Titotal for the excellent substance of this critique, and wish it had come with better overall framing.