Real-Time, Court-Admissible Crypto Intelligence at 1/400th the Cost of Inferior Legacy Systems

The explosion of blockchain data isn’t just a challenge; it’s a crisis for conventional analytics. Financial institutions, investigators, and law enforcement agencies are hamstrung by tools that are too slow, expensive, and built on legacy database technologies incapable of keeping pace. Critical insights are missed, opportunities vanish, and illicit activities remain obscured by systems that offer only a shallow view of the complex reality.

At Caudena, we didn’t just tweak an existing solution; we architected CashflowD (CFD) from the ground up, a proprietary in-memory database and JIT-compiling query engine built in modern C++. The result is a 200X to 400X reduction in infrastructure costs. Our competitors’ capabilities are significantly inferior and cannot produce the same depth of analysis. We deliver true real-time analytics, with some queries returning in sub-millisecond timeframes – often bottlenecked only by network latency. This isn’t an incremental improvement; it’s a paradigm shift that unlocks previously impossible analytical capabilities, provides court-admissible evidence, and fundamentally changes the economics of blockchain intelligence, allowing us to empower organizations to outmaneuver and outperform all existing solutions.

CFD is a cryptocurrency analytics engine designed to process blockchain data at scale, perform sophisticated address clustering with verifiable, court-admissible paths, conduct in-depth investigations, and assign robust, interpretable risk scores. It is engineered for those who demand complex analysis of the blockchain involving massive operations on its ever-expanding data, from financial institutions and compliance teams to investigators facing down sophisticated adversaries. The architecture of CFD doesn’t just handle vast data volumes; it crushes them. That matters because blockchains like Solana already exceed 400TB and are projected to reach 1 Petabyte within a year, rendering less efficient systems economically unviable.

Under the Hood: The Architecture of Unrivaled Speed, Power, and Efficiency

The “magic” behind CFD isn’t magic at all; it’s the result of deep C++ expertise, a first-principles approach to data management, and an obsession with performance that leaves competitors in the dust.

1. The In-Memory C++ Core: Where Every Nanosecond Counts

Conventional tools hit a wall with I/O and struggle with data volume. We demolished that wall and built a highway.

All of the relevant blockchain data processed by CFD resides in RAM and is handled by our custom C++ engine. This takes disk I/O off the query path, forming the bedrock of our sub-second query capabilities. However, “in-memory” alone isn’t enough; it’s how we manage that memory that sets us apart, especially when dealing with Petabyte-scale projections.

Highly Optimized Custom Storage: We don’t rely on off-the-shelf databases. CFD uses its own in-memory storage system that maps less frequently accessed data to disk when necessary, so RAM is prioritized for active computation. As a result, transaction processing and the incorporation of new blocks and addresses are extraordinarily fast.

Aggressive Data Packing & Representation: Every bit per transaction is worth saving when dealing with billions of transactions and terabytes of data. Our data is packed to reduce size: sometimes aligned for CPU cache efficiency, sometimes stored in variable-length structures. We employ small-vector optimizations where sensible, and even advanced techniques like pointer tagging. On x86-64 Linux with 4-level paging, virtual addresses are 48 bits wide and user-space addresses fit in 47, leaving the upper 16 (or 17 in user space) bits of a 64-bit pointer free. We leverage this, for instance, by reserving bits that mark a value for special treatment: if a specific bit is set, the value might be interpreted as a pointer to a larger 128-bit value, or as an index into a specialized dictionary. This avoids spending (say) 16 bits per value when 8 bits cover 99.99% of cases. This attention to data representation is how we manage to hold and process colossal datasets entirely in-memory.
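The tag-bit idea can be sketched as follows. This is a minimal illustration, not CFD’s actual layout: `TaggedSlot`, `Wide128`, and the choice of bit 63 as the tag are hypothetical, and the sketch assumes a 64-bit platform whose user-space addresses leave that bit clear.

```cpp
#include <cassert>
#include <cstdint>

static_assert(sizeof(std::uintptr_t) == 8, "sketch assumes a 64-bit platform");

// Hypothetical out-of-line wide value for the rare cases that do not fit inline.
struct Wide128 { std::uint64_t lo, hi; };

// Hypothetical 8-byte slot: either a small inline value or a tagged pointer.
// Assumes user-space addresses never use bit 63, so it is free to act as a tag.
class TaggedSlot {
    static constexpr std::uint64_t kTag = 1ULL << 63;
    std::uint64_t bits_ = 0;
public:
    static TaggedSlot from_inline(std::uint64_t v) {
        assert((v & kTag) == 0);  // inline values must leave the tag bit clear
        TaggedSlot s;
        s.bits_ = v;
        return s;
    }
    static TaggedSlot from_wide(const Wide128* p) {
        auto raw = static_cast<std::uint64_t>(reinterpret_cast<std::uintptr_t>(p));
        assert((raw & kTag) == 0);  // the address itself must not collide with the tag
        TaggedSlot s;
        s.bits_ = raw | kTag;
        return s;
    }
    bool is_wide() const { return (bits_ & kTag) != 0; }
    std::uint64_t inline_value() const {
        assert(!is_wide());
        return bits_;
    }
    const Wide128* wide() const {
        assert(is_wide());
        // Clear the tag before turning the bits back into a pointer.
        return reinterpret_cast<const Wide128*>(static_cast<std::uintptr_t>(bits_ & ~kTag));
    }
};
```

The common case costs one branch on a single bit, and the slot stays 8 bytes whether it holds a small value or refers to a wide one.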

Consider this illustrative C++ snippet, showcasing our philosophy of deriving properties from an object’s memory alignment to wring every ounce of performance from the hardware:

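    #include <cstddef>   // std::size_t
    #include <cstdint>   // std::uintptr_t

    // Parity is derived from the object's slot in a contiguous, suitably aligned array.
    struct OddEven { /* ... members ... */ bool is_even() const; };

    bool OddEven::is_even() const {
        return (reinterpret_cast<std::uintptr_t>(this) / sizeof(OddEven)) % 2 == 0;
    }

    // Or for block-aligned items: the index within the block comes from the address.
    std::size_t Item::get_index() const {
        return reinterpret_cast<std::uintptr_t>(this) % block_alignment / sizeof(Item);
    }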

Securing such block alignment can be achieved by reserving block_alignment * 2 bytes of address space (e.g., via mmap with PROT_NONE) and then unmapping the extra pages before and after the aligned window. This meticulous, low-level engineering is pervasive throughout CFD.
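The over-reserve-and-trim approach might look like the sketch below. It assumes a POSIX system with anonymous mappings; `map_aligned_block`, `kBlockAlignment`, and this `Item` layout are hypothetical names chosen for illustration, and the alignment is assumed to be a power of two and a multiple of the page size.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <sys/mman.h>

constexpr std::size_t kBlockAlignment = std::size_t(1) << 16;  // 64 KiB, hypothetical

// Returns `alignment` bytes whose start address is a multiple of `alignment`,
// or nullptr on failure. `alignment` must be a power of two and a multiple of the page size.
inline void* map_aligned_block(std::size_t alignment) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);
    // Reserve twice the size with no access rights: an aligned window must lie inside.
    void* raw = mmap(nullptr, alignment * 2, PROT_NONE, MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (raw == MAP_FAILED) return nullptr;
    const auto base = reinterpret_cast<std::uintptr_t>(raw);
    const std::uintptr_t mask = static_cast<std::uintptr_t>(alignment) - 1;
    const std::uintptr_t aligned = (base + mask) & ~mask;
    const std::size_t head = aligned - base;    // unused bytes before the window
    const std::size_t tail = alignment - head;  // unused bytes after the window
    if (head != 0) munmap(raw, head);
    if (tail != 0) munmap(reinterpret_cast<void*>(aligned + alignment), tail);
    // Make the surviving window readable and writable.
    if (mprotect(reinterpret_cast<void*>(aligned), alignment, PROT_READ | PROT_WRITE) != 0) {
        munmap(reinterpret_cast<void*>(aligned), alignment);
        return nullptr;
    }
    return reinterpret_cast<void*>(aligned);
}

// Hypothetical item stored in such a block: its index is recovered from its address.
struct Item {
    std::uint64_t payload;
    std::size_t get_index() const {
        return reinterpret_cast<std::uintptr_t>(this) % kBlockAlignment / sizeof(Item);
    }
};
```

Because the block starts on a multiple of `kBlockAlignment`, an item needs no stored index or back-pointer: the low bits of its own address already encode its position.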

Data Structures (HAMTs & More): Standard associative containers like std::unordered_map couldn’t meet our demands for memory efficiency and raw speed at the scale of billions of data points. We developed custom Hash Array Mapped Tries (HAMTs), utilizing BitArrayMaps (more on this later) internally and custom allocators. This gives us near flat-map memory density with sparse-hash-map performance – crucial for managing billions of data points. Our custom allocators even allow the use of memory pools and disk-backed shared memory, enabling seamless synchronization to disk, memory reclamation, and write/read protection for enhanced stability. This level of optimization is simply unattainable with off-the-shelf database solutions and is fundamental to our performance and cost-efficiency.

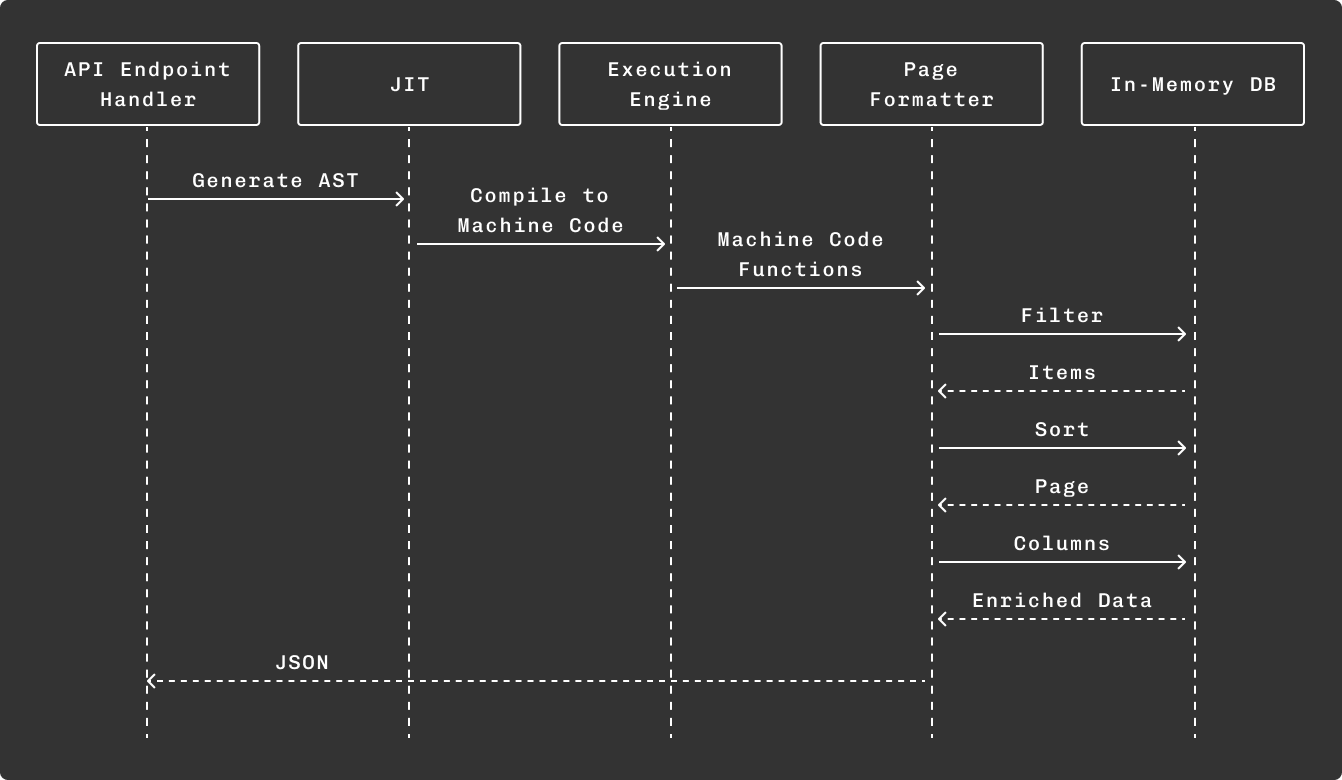

2. The JIT Compilation: Ask Any Question, Get Real-Time, Verifiable Answers

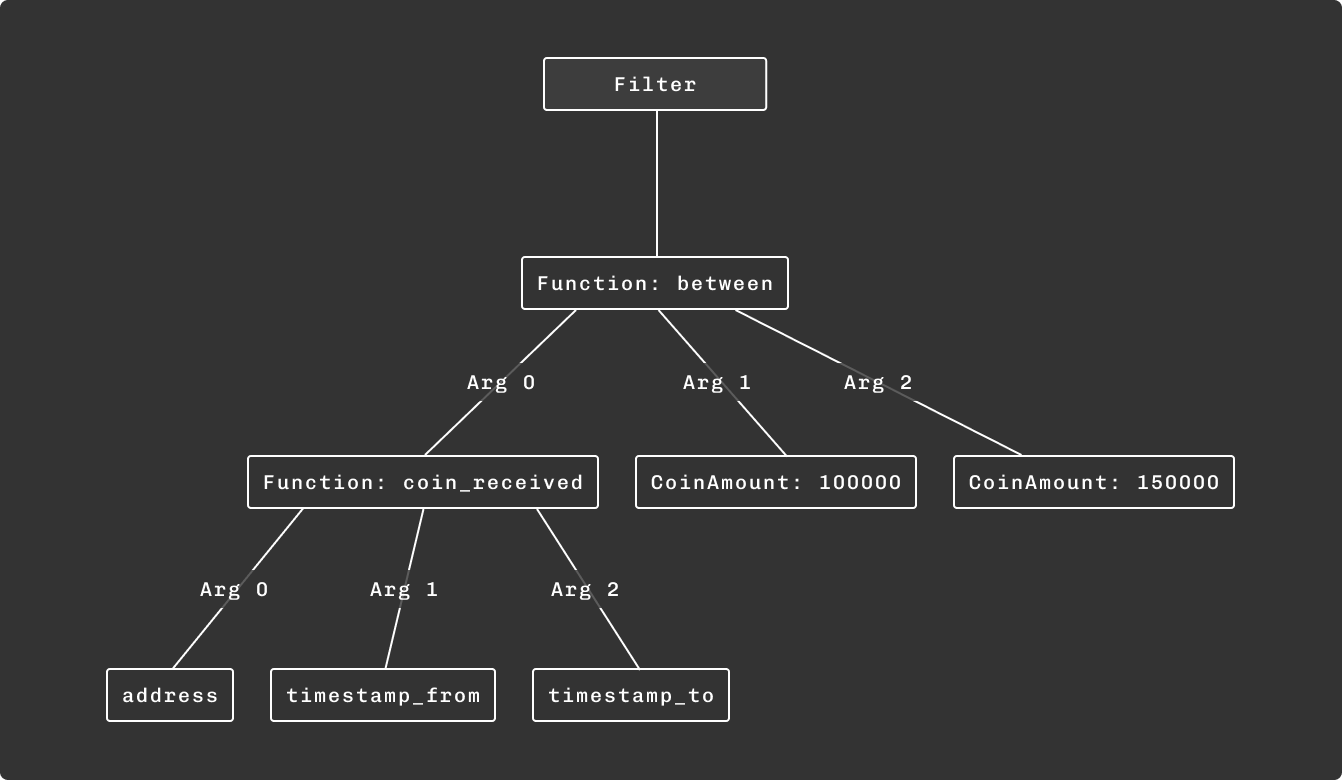

Static queries are limiting. Pre-canned analytics only go so far and often lack transparency. CFD empowers analysts with JIT-compilation of arbitrary user expressions, transforming complex, ad-hoc queries into optimized machine code on the fly.

LLVM ORCv2 Integration: Our JIT engine, built on LLVM’s ORCv2, offers greater flexibility and allows the use of weak linkage. It isn’t just a bolt-on; it’s deeply integrated. JIT-compiled queries can directly call highly optimized C++ core functions with minimal overhead, avoiding the abstraction penalties common in other systems and squeezing maximum performance from the metal. Precompiled headers keep compilation fast enough that queries can use templates and inline functions. Internal features defined in those headers become available to queries as soon as they are introduced; the rest only need their symbols to be exposed.

This dynamic query capability allows analysts to ask questions of the data that were previously too computationally expensive to even consider. Imagine crafting a novel, complex filter for specific transactional patterns across terabytes of data and getting results in moments, not days. That’s the power CFD’s JIT engine unlocks, providing insights far deeper than any competitor.

When Compiling is “Too Slow” (Handling Thousands of RPS): For certain high-throughput scenarios, like our BFS filter handling thousands of requests per second with different custom expressions, full JIT compilation for each request can introduce latency. Here, we pragmatically employ an old-school approach: a simple LL-grammar parsed with boost::spirit builds lightweight ASTs, covering most high-frequency needs with minimal overhead. This demonstrates our adaptability in choosing the right tool for the job, always prioritizing performance.
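To make the lightweight-AST route concrete, here is a small hand-written recursive-descent (LL) parser and evaluator. It is a stand-in written without boost::spirit so that it stays self-contained, and the grammar, the `Node` layout, and the variable names in the usage are all hypothetical rather than CFD’s filter language.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <map>
#include <memory>
#include <stdexcept>
#include <string>
#include <utility>

// AST node. kind: 'n' number, 'v' variable, or an operator: '<', '>', '&' (logical and).
struct Node {
    char kind = 0;
    double number = 0.0;
    std::string name;
    std::unique_ptr<Node> lhs, rhs;
};
using NodePtr = std::unique_ptr<Node>;

// Grammar:  expr := cmp ("&&" cmp)*    cmp := operand ("<" | ">") operand
class Parser {
    std::string src_;
    std::size_t pos_ = 0;
    static bool is_ident(char c) { return std::isalnum(static_cast<unsigned char>(c)) || c == '_'; }
    void skip_ws() {
        while (pos_ < src_.size() && std::isspace(static_cast<unsigned char>(src_[pos_]))) ++pos_;
    }
    bool eat(const std::string& tok) {  // consume tok if it is next in the input
        skip_ws();
        if (src_.compare(pos_, tok.size(), tok) != 0) return false;
        pos_ += tok.size();
        return true;
    }
    NodePtr operand() {
        skip_ws();
        if (pos_ >= src_.size()) throw std::runtime_error("operand expected");
        auto node = std::make_unique<Node>();
        if (std::isdigit(static_cast<unsigned char>(src_[pos_]))) {
            std::size_t used = 0;
            node->kind = 'n';
            node->number = std::stod(src_.substr(pos_), &used);
            pos_ += used;
        } else if (is_ident(src_[pos_])) {
            node->kind = 'v';
            while (pos_ < src_.size() && is_ident(src_[pos_])) node->name += src_[pos_++];
        } else {
            throw std::runtime_error("operand expected");
        }
        return node;
    }
    NodePtr comparison() {
        NodePtr left = operand();
        const char op = eat("<") ? '<' : (eat(">") ? '>' : 0);
        if (op == 0) throw std::runtime_error("comparison operator expected");
        auto node = std::make_unique<Node>();
        node->kind = op;
        node->lhs = std::move(left);
        node->rhs = operand();
        return node;
    }
public:
    explicit Parser(std::string src) : src_(std::move(src)) {}
    NodePtr parse() {
        NodePtr left = comparison();
        while (eat("&&")) {
            auto node = std::make_unique<Node>();
            node->kind = '&';
            node->lhs = std::move(left);
            node->rhs = comparison();
            left = std::move(node);
        }
        skip_ws();
        if (pos_ != src_.size()) throw std::runtime_error("unexpected trailing input");
        return left;
    }
};

// Walks the AST; comparisons and conjunctions yield 1.0 (true) or 0.0 (false).
inline double eval(const Node& n, const std::map<std::string, double>& vars) {
    if (n.kind == 'n') return n.number;
    if (n.kind == 'v') return vars.at(n.name);
    assert(n.lhs && n.rhs);  // operator nodes always have two children
    const double a = eval(*n.lhs, vars);
    const double b = eval(*n.rhs, vars);
    if (n.kind == '<') return a < b ? 1.0 : 0.0;
    if (n.kind == '>') return a > b ? 1.0 : 0.0;
    return (a != 0.0 && b != 0.0) ? 1.0 : 0.0;  // '&'
}
```

Parsing an expression such as `amount > 100 && hops < 4` once and re-evaluating the resulting tree per item avoids invoking a compiler on the request path, which is the trade-off the paragraph above describes.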

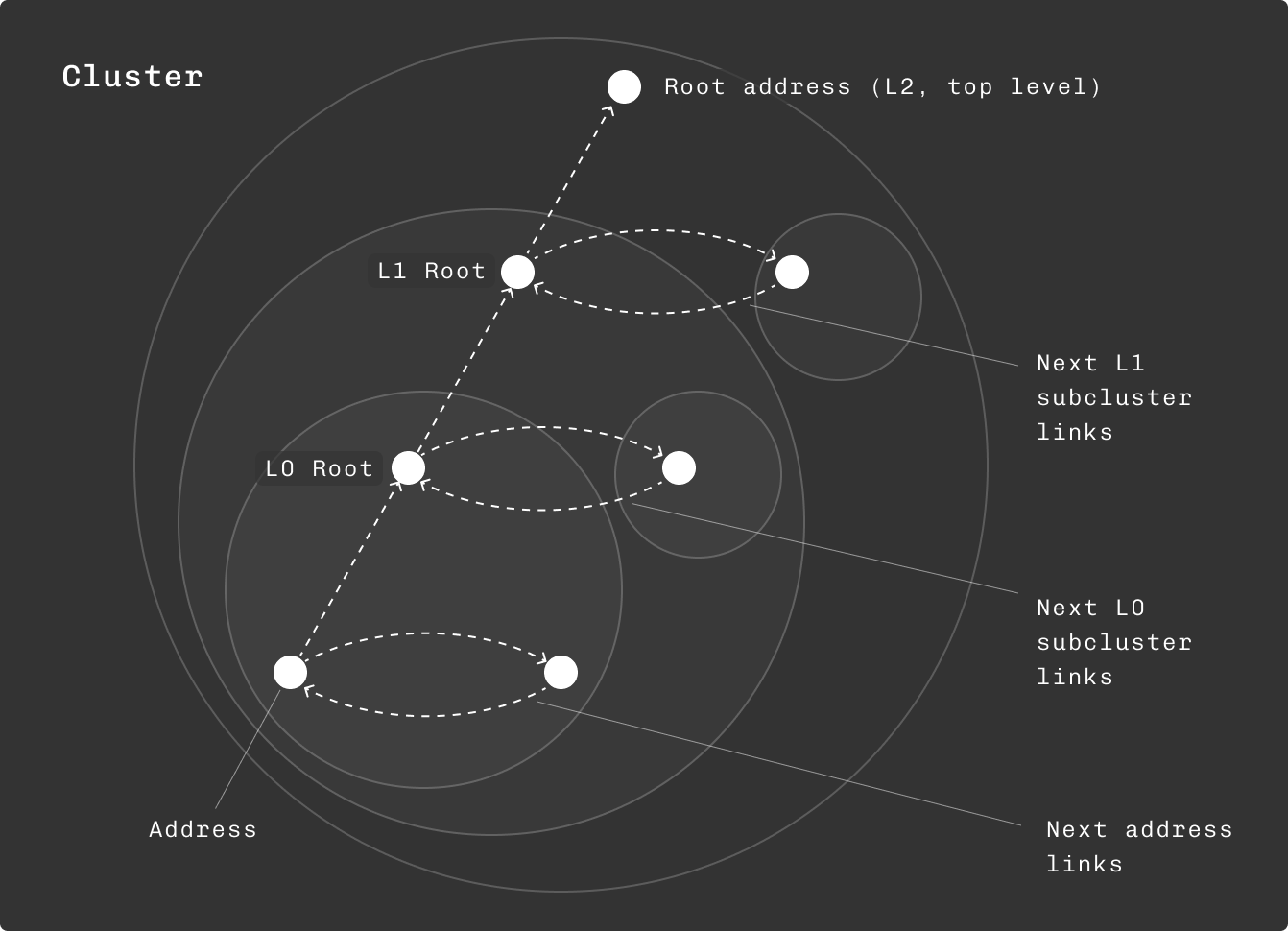

3. Intelligent Clustering & Reclustering: Real-Time, Court-Admissible Insights

Understanding relationships in blockchain data requires sophisticated clustering. Doing it in real-time, deterministically, and with full interpretability for evidentiary purposes is where CFD establishes its dominance.

Deterministic & Court-Admissible Clustering: We engineered our clustering algorithms to be not only fast but also deterministic (yielding the same results regardless of start height or data ingestion order) and fully interpretable. Crucially for Law Enforcement and regulatory bodies, CFD provides the exact clustering path via API request, detailing precisely why any given address was included in a cluster. This transparency and verifiability make our data court-admissible, a critical differentiator.

Multi-Level Confidence: Addresses are aggregated into clusters and subclusters based on varying levels of confidence.

O(1) Cluster Joins: All addresses of a cluster form a circular singly linked list. Swapping the ‘next’ links of two nodes splits such a list in two if both nodes belong to the same list, or joins two lists if they belong to different ones, in O(1) time either way. This enables joining entire clusters in O(1), allowing for near-instantaneous updates to massive clusters as new data arrives, a feat beyond the reach of traditional graph databases or batch-oriented systems.
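The link swap can be demonstrated in a few lines. In this sketch addresses are array indices and `ClusterRing` is a hypothetical name; knowing whether two addresses currently share a cluster is assumed to come from elsewhere, since the swap itself does not check.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// next[i] is the successor of address i in its cluster's circular list.
struct ClusterRing {
    std::vector<std::size_t> next;

    explicit ClusterRing(std::size_t n) : next(n) {
        for (std::size_t i = 0; i < n; ++i) next[i] = i;  // every address starts alone
    }

    // O(1): joins the two rings if a and b are in different rings,
    // splits the ring in two if they are in the same one.
    void swap_links(std::size_t a, std::size_t b) {
        assert(a < next.size() && b < next.size());
        std::swap(next[a], next[b]);
    }

    // O(size): walks the ring once; only used here to check the result.
    std::size_t ring_size(std::size_t start) const {
        std::size_t n = 1;
        for (std::size_t i = next[start]; i != start; i = next[i]) ++n;
        return n;
    }
};
```

Applying the same swap twice restores the original rings, which is why one primitive serves for both splitting and joining.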

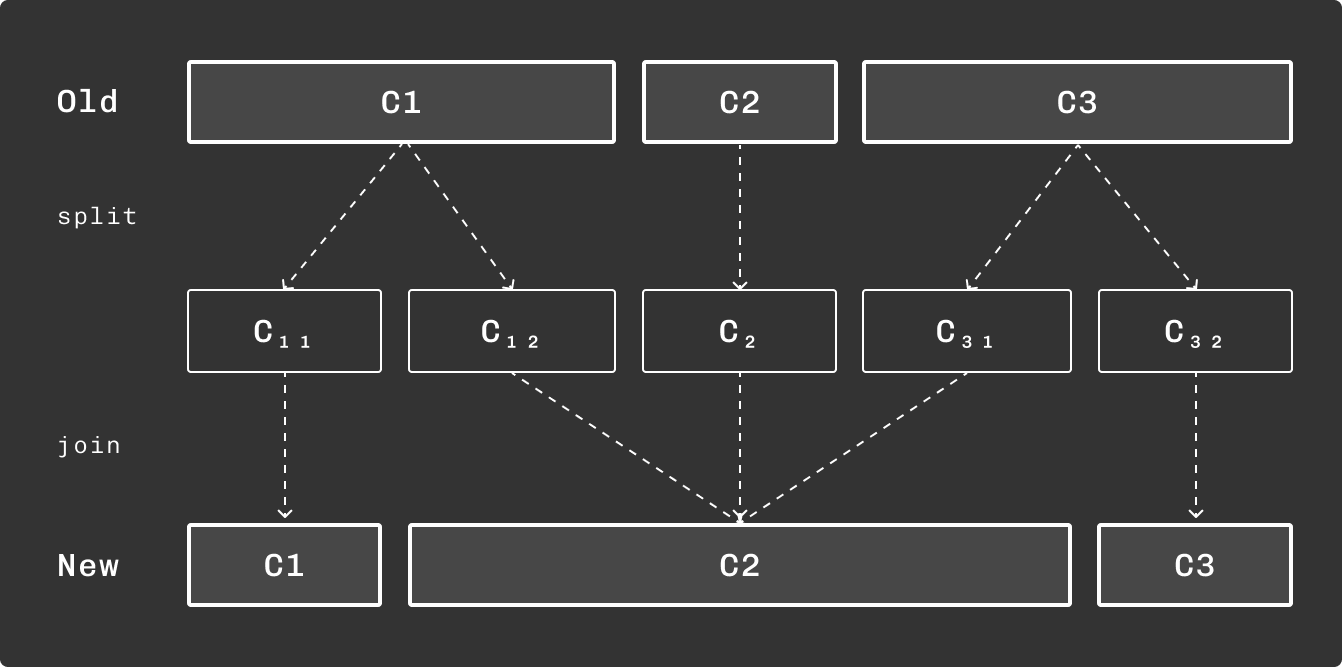

Dynamic Reclustering with Overlay Forest: To handle reclustering with precision (e.g., when a new heuristic is applied or an error corrected), we introduced the Overlay Forest. This ‘diff’ layer allows us to identify exact changes between old and new clustering states. By analyzing this overlay (a patch of sorts), we determine the affected clusters and generate split-and-join transformations. These updates to cluster statistics and caches are then efficiently parallelized, ensuring data consistency and speed.

The most effective approach for cache updates post-reclustering is to first split clusters into parts that match in both original and overlay forests, and then perform the necessary joins.

4. Resilient & Instantaneous Scoring

Identifying risk is paramount. Our scoring model is designed to be robust, interpretable, and resistant to common money laundering tactics – providing a level of sophistication competitors can’t match.

Resistant to Money-Laundering: We keep risks “sticky.” Unlike simple PageRank-style algorithms where scores can be diluted through complex laundering networks (peel chains, structuring, layering), our proprietary approach ensures that the original risk signature doesn’t dissolve, no matter how many hops or loops are involved.

Mathematically Robust Propagation: Scores and categories are initially assigned to identified clusters and then propagated to unidentified counterparties. For both incoming and outgoing scores, and for every score subcategory, we solve the equation MS = S.

M is a right stochastic N×N matrix, where N is the number of clusters plus special sources (mining, issuance, etc.), and S is the vector of scores. Because each row of M sums to 1, each cluster’s category share is a weighted average of the shares of its counterparties.

A direct solve is computationally prohibitive at this scale. We first ensure that every row M_i has at least one nonzero element (adding tiny flows from an undefined source, or excluding isolated components if necessary, so that the system does not admit infinitely many solutions). Then we solve MS = S iteratively. M is a right stochastic matrix, so no eigenvalue exceeds 1 in magnitude, but as with Markov chains, convergence of the iteration isn’t guaranteed without specific conditions.

Efficient Multi-Phase Score Updates: Our process (estimate, solve for changed, propagate, refine) ensures that when clusters or entity scores change, we’re not recomputing the world:

- Estimate the initial score.

- Solve scores for changed clusters, assuming the rest are unchanged.

- Iteratively update the list of affected clusters and recalculate for them only.

- Refine the solution with full multiplications until discrepancy is low enough.
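The final refinement step, repeated multiplication until the discrepancy is small, can be sketched as a plain fixed-point iteration on a sparse M. This is a simplified illustration under stated assumptions: identified clusters are modelled as pinned entries whose scores never change, and `Row`, `propagate_scores`, the tolerance, and the sweep limit are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// One sparse row of M: (column, weight) pairs whose weights sum to 1.
using Row = std::vector<std::pair<std::size_t, double>>;

// Repeats S <- M*S for the non-pinned entries until the largest change in a sweep
// drops below tol. pinned[i] marks clusters whose score is known and held fixed.
// Returns the number of sweeps performed (max_sweeps if the tolerance was not reached).
inline std::size_t propagate_scores(const std::vector<Row>& m,
                                    const std::vector<bool>& pinned,
                                    std::vector<double>& s,
                                    double tol = 1e-9,
                                    std::size_t max_sweeps = 10000) {
    assert(m.size() == s.size() && pinned.size() == s.size());
    std::vector<double> next(s.size());
    for (std::size_t sweep = 1; sweep <= max_sweeps; ++sweep) {
        double max_delta = 0.0;
        for (std::size_t i = 0; i < s.size(); ++i) {
            if (pinned[i]) {  // known score: copy through unchanged
                next[i] = s[i];
                continue;
            }
            double acc = 0.0;  // weighted average of counterparties' scores
            for (const auto& entry : m[i]) acc += entry.second * s[entry.first];
            next[i] = acc;
            max_delta = std::max(max_delta, std::fabs(acc - s[i]));
        }
        s.swap(next);
        if (max_delta < tol) return sweep;
    }
    return max_sweeps;
}
```

Restricting the inner loop to a list of affected clusters, rather than all rows, would give the incremental variant described in the earlier steps; the sweep limit guards against the non-convergent cases mentioned above.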

Instant Availability & Customization: All scores are immediately available after any update (new blocks, new entities). They can be aggregated, filtered, and queried. We provide incoming/outgoing scores as cashflow shares of different categories, and the general score on top can be customized, allowing nuanced responses to even tiny shares of high-risk categories like Terrorism Financing.

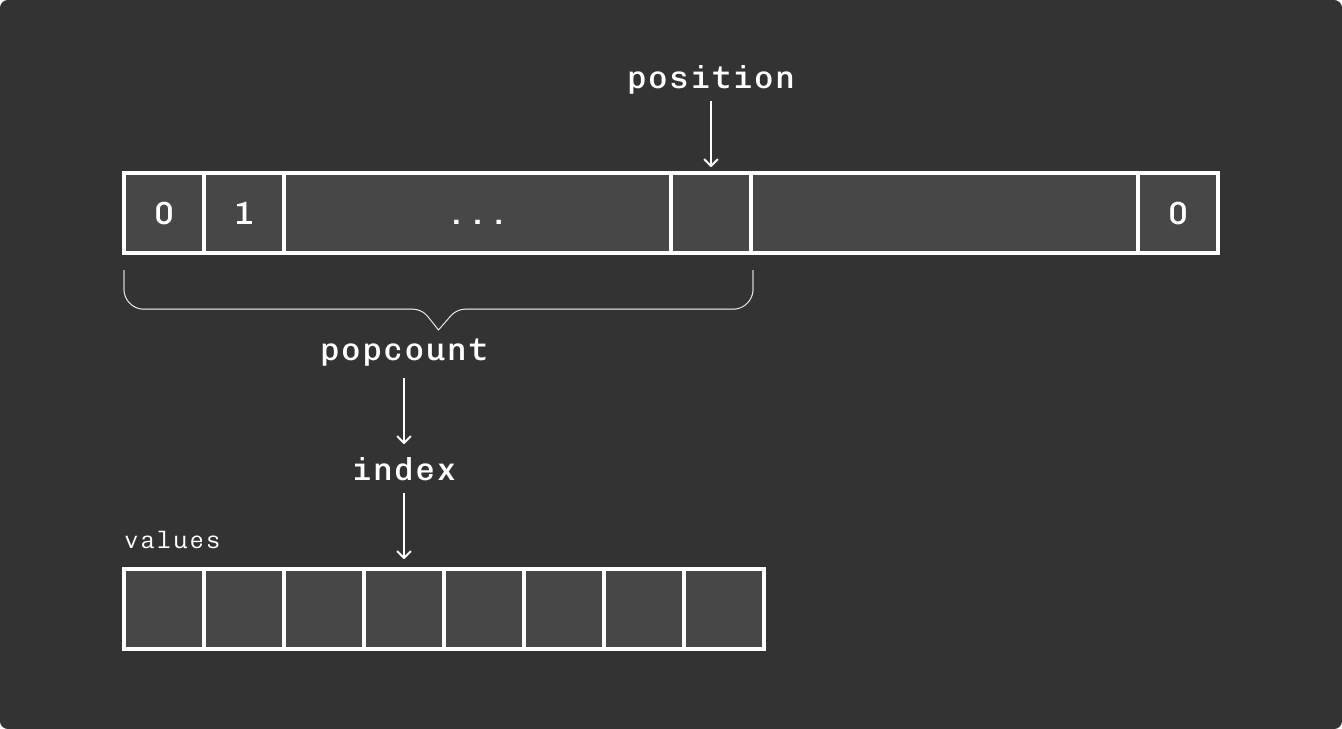

Efficient Score Storage with BitArrayMap: Storing scores for potentially millions of clusters efficiently is paramount. Scores (floats from 0 to 1, packed into 16-bit unsigned integers) are stored in dynamic arrays using BitArrayMap items. A BitArrayMap represents a bit array supporting fast rank/select operations (rank gives the count of set bits before a position; select gives the value from an associated array for a given set bit index). This provides rapid access to index/identifier-value pairs. We use popcount-like builtins for efficient index/position calculations. Score share values are allocated using stack allocators, and memory for both arrays and allocators often uses huge pages for better TLB efficiency, all contributing to speed and memory efficiency.
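A 64-slot version of the idea fits in a few lines. The sketch below is an assumption-laden illustration rather than CFD’s BitArrayMap: `BitArrayMap64`, `pack_score`, and `unpack_score` are hypothetical names, the linear quantization is one plausible packing, a `std::vector` stands in for the custom allocators, and `__builtin_popcountll` assumes GCC or Clang.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Linear quantization of a score in [0, 1] to 16 bits (step 1/65535) and back.
inline std::uint16_t pack_score(double s) {
    assert(s >= 0.0 && s <= 1.0);
    return static_cast<std::uint16_t>(std::lround(s * 65535.0));
}
inline double unpack_score(std::uint16_t q) { return q / 65535.0; }

// Sparse map over slots 0..63: bit i of mask_ says whether slot i is present;
// the present values are stored densely, in ascending slot order.
class BitArrayMap64 {
    std::uint64_t mask_ = 0;
    std::vector<std::uint16_t> values_;

    // rank: number of set bits strictly below position pos (pos < 64).
    std::size_t rank(unsigned pos) const {
        return static_cast<std::size_t>(__builtin_popcountll(mask_ & ((1ULL << pos) - 1)));
    }
public:
    bool contains(unsigned pos) const {
        assert(pos < 64);
        return ((mask_ >> pos) & 1ULL) != 0;
    }
    void set(unsigned pos, std::uint16_t v) {
        const std::size_t idx = rank(pos);
        if (contains(pos)) {  // overwrite in place
            values_[idx] = v;
            return;
        }
        values_.insert(values_.begin() + static_cast<std::ptrdiff_t>(idx), v);
        mask_ |= 1ULL << pos;
    }
    // Value stored for slot pos; the slot must be present.
    std::uint16_t get(unsigned pos) const {
        assert(contains(pos));
        return values_[rank(pos)];
    }
    std::size_t size() const { return values_.size(); }
};
```

Absent slots cost one bit each, and a lookup is one mask, one popcount, and one array access.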

5. Caching & Data Traversal: Sub-Millisecond Response Times

To sustain this level of performance, where basic queries like address/cluster statistics are often sub-millisecond (network-bound, not database-bound), CFD employs a sophisticated caching system.

Address Balance Cache: Stores balances and sent/received amounts over different time intervals for addresses with significant transaction counts, updated on each new transaction.

Entity Cache: Maps addresses to known entities and vice versa, including clustering info for efficient updates.

Cluster Statistics Cache: Aggregated statistics for address clusters (balances, transaction arrays for inter-cluster traversal, activity info, USD cashflow between clusters). Updating these based on clustering differences (splits/joins) is a complex but highly optimized task.

Crunching Numbers at Scale with Optimized Iterators: With huge clusters and datasets, filtering, sorting, and aggregating become challenging. We meticulously avoid extensive memory allocations during processing, favoring stack allocators, memory pools, or direct page allocation. Many core operations, like traversing transactions between large clusters or identifying common counterparties, involve complex operations on sorted data ranges. Our custom iterators and algorithms can, for example, prepare k-way merges for parallel processing in k⋅logk(n) time, distributing workloads efficiently across all available cores.
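For readers unfamiliar with the primitive, a basic sequential k-way merge over sorted ranges looks like this. It is a textbook heap-based version using standard containers, not the allocation-free, parallel-partitioned variant described above, and `kway_merge` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Merges k individually sorted ranges into one sorted output using a min-heap
// that holds the current head of each non-exhausted range.
inline std::vector<int> kway_merge(const std::vector<std::vector<int>>& ranges) {
    using Entry = std::pair<int, std::size_t>;  // (value, index of source range)
    std::priority_queue<Entry, std::vector<Entry>, std::greater<Entry>> heap;
    std::vector<std::size_t> cursor(ranges.size(), 0);
    std::size_t total = 0;
    for (std::size_t r = 0; r < ranges.size(); ++r) {
        total += ranges[r].size();
        if (!ranges[r].empty()) heap.push(Entry(ranges[r][0], r));
    }
    std::vector<int> out;
    out.reserve(total);  // single allocation for the result
    while (!heap.empty()) {
        const Entry e = heap.top();
        heap.pop();
        out.push_back(e.first);
        const std::size_t r = e.second;
        // Advance the range the value came from and push its next head, if any.
        if (++cursor[r] < ranges[r].size()) heap.push(Entry(ranges[r][cursor[r]], r));
    }
    return out;
}
```

A parallel version would first cut every input range at common splitter values so that each core merges an independent slice of the output.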

Economic Impact: The Caudena Moat

The technical prowess of CFD translates directly into transformative business advantages. Unlike conventional blockchain analytics platforms dependent on sprawling, high-cost infrastructure and offering limited analytical depth, Caudena’s proprietary in-memory data architecture radically elevates efficiency and capability. Industry experts note that memory-first systems can deliver 10–100× performance improvements – but Caudena’s design goes even further, delivering vastly superior analytic power at 200× to 400× lower infrastructure cost than even these less capable systems.

This multi-hundred-fold leap transforms the economics of blockchain analysis, especially critical as data volumes for chains like Solana (currently 400TB+, heading to 1PB) make legacy solutions prohibitively expensive. What might be a million-dollar data operation offering shallow insights elsewhere turns into something that runs on mere thousands with Caudena, providing deep, real-time, and court-admissible intelligence. By keeping critical data in RAM and optimizing every query for speed via JIT compilation and C++ routines, CFD eliminates I/O bottlenecks and achieves near real-time insights without an army of servers. This isn’t a minor incremental edge – it’s a fundamental technological moat and a business game-changer that allows Caudena to outmaneuver and vastly outperform far larger incumbents.

Stability & Real-World Performance

Speed and depth are nothing without reliability. CFD is built for demanding production environments where failure is not an option.

Rapid Restart & Updates: By default, CFD ingests data from huge compressed JSON files (e.g., a 1TB JSON can be processed in ~1 hour via an asynchronous pipeline) and from PostgreSQL/ClickHouse connections. For even faster restarts, full dumps or mini-dumps (without caches, if the data format hasn’t changed significantly) can be used. The Bitcoin version of CFD can load from a full dump in 1 minute, fully utilizing CPU and I/O. Production and staging servers are updated seamlessly, with no interruption visible to users accessing them via a load balancer.

Measures to Improve Stability:

- Robust Environment Support: Assertions and critical events are logged with stack traces in production. In staging, the application can stop and wait for a debugger, facilitating rapid issue resolution.

- Comprehensive Logging: All assertions, signals, and critical exceptions are logged, including stack traces.

- Forking Option (Safety Net): While never yet needed in production, a forking option exists to isolate potentially unstable user-composed requests, prioritizing core application stability. This reflects our commitment to balancing extreme performance with robust error handling.

The Road Ahead: Caudena’s Vision for Dominance

CFD is the foundation for our market leadership, not the endgame. Our future plans are ambitious:

- Universal Blockchain Support & Sharding for Petabyte Scale: Architecting CFD to conquer the largest, most complex blockchains, introducing intelligent sharding where necessary to ensure future-proof scalability and maintain our cost/performance advantage even as data explodes.

- AI-Driven Smart Contract Deconstruction: Moving beyond simple transaction tracing to achieve deep, semantic understanding of complex DeFi protocols and sophisticated illicit financing schemes by precisely decoding internal contract logic.

- Unified Cross-Chain Intelligence Fabric: Breaking down siloes to provide a holistic view of value flow across the entire crypto-ecosystem, powered by intelligent bridge contract analysis, enabling truly seamless multi-chain analysis that reflects the interconnected reality of digital assets.

The Paradigm Shift in Crypto Intelligence is Here, and Caudena is Leading It

Caudena’s CFD represents more than an analytics engine; it’s the foundational technology for the next generation of financial intelligence and law enforcement in the digital asset space. By fundamentally re-engineering how data is stored, processed, and queried, we’ve unlocked capabilities previously out of reach, solving the core challenges of speed, cost, complexity, and court-admissible data at a level existing alternatives have not reached.

We are empowering organizations to navigate the complexities of the digital asset world with unprecedented clarity, speed, and confidence. This isn’t just an improvement on existing tools. It’s the enabling technology for the future of crypto intelligence, setting a new standard that others cannot meet. The era of slow, expensive, shallow, and non-verifiable blockchain analytics is over.