AI agents are a hot topic, but not every AI system needs to be one.

While agents promise autonomy and decision-making power, simpler and more cost-effective solutions better serve many real-world use cases. The key lies in choosing the right architecture for the problem at hand.

In this post, we'll explore recent developments in Large Language Models (LLMs) and discuss key concepts of AI systems.

We've worked with LLMs across projects of varying complexity, from zero-shot prompting to chain-of-thought reasoning, from RAG-based architectures to sophisticated workflows and autonomous agents.

This is an emerging field with evolving terminology. The boundaries between different concepts are still being defined, and classifications remain fluid. As the field progresses, new frameworks and practices emerge to build more reliable AI systems.

To demonstrate these different systems, we'll walk through a familiar use case – a resume-screening application – to reveal the unexpected leaps in capability (and complexity) at each level.

Pure LLM

A pure LLM is essentially a lossy compression of the internet, a snapshot of knowledge from its training data. It excels at tasks involving this stored knowledge: summarizing novels, writing essays about global warming, explaining special relativity to a 5-year-old, or composing haikus.

However, without additional capabilities, an LLM cannot provide real-time information like the current temperature in NYC. This distinguishes pure LLMs from chat applications like ChatGPT, which enhance their core LLM with real-time search and additional tools.

That said, not all enhancements require external context. Prompting techniques such as in-context learning and few-shot learning help LLMs tackle specific problems without the need for context retrieval.

Example:

- To check if a resume is a good fit for a job description, an LLM with one-shot prompting and in-context learning can be utilized to classify it as Passed or Failed.

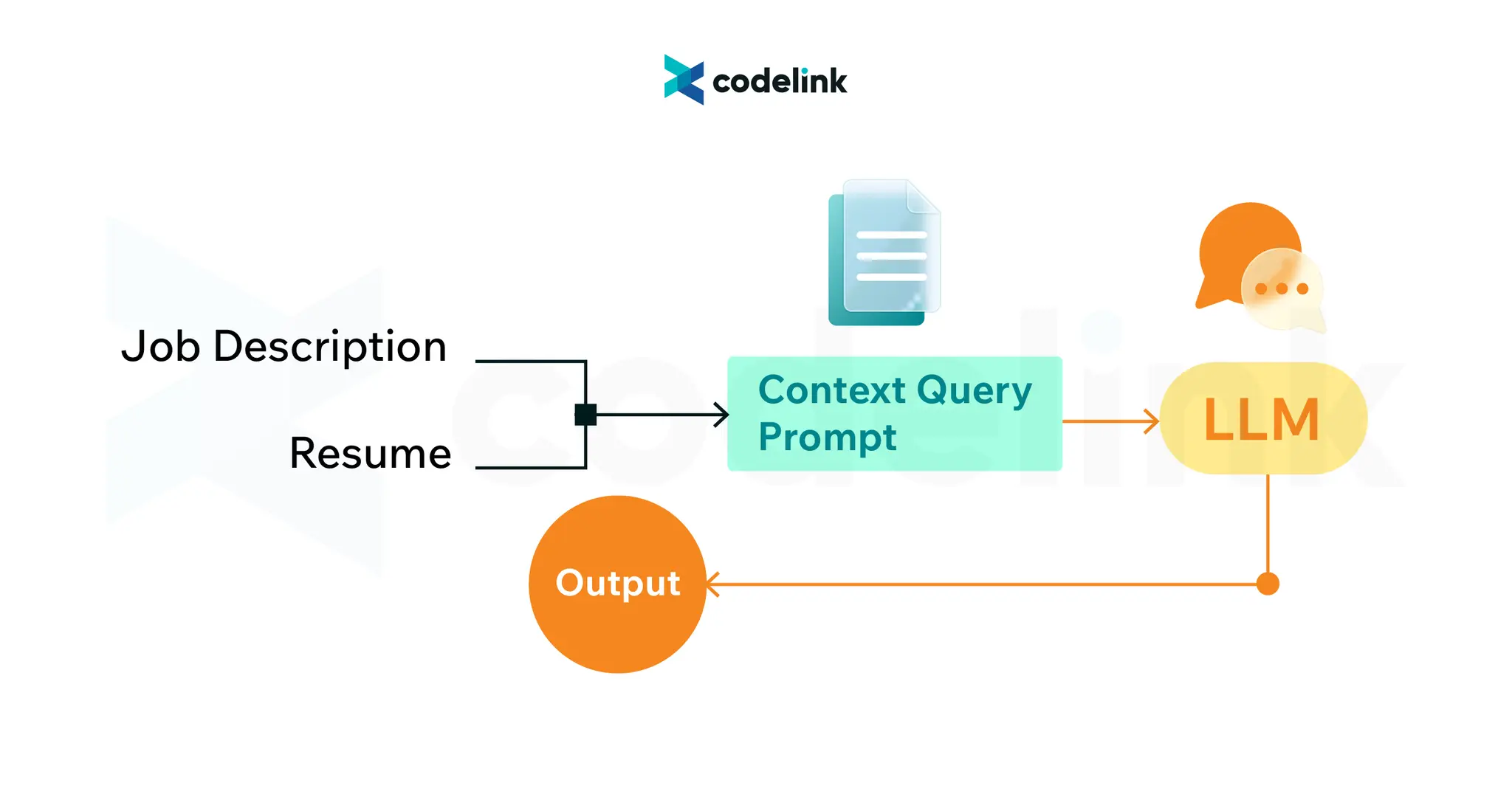
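The one-shot classification described above can be sketched as follows. This is a minimal illustration, not a definitive implementation: the worked example in `EXAMPLE`, the helper names, and the `fake_llm` stand-in are all assumptions made for this sketch, and any real model client would replace `fake_llm`.

```python
from typing import Callable

# Hypothetical worked example shown to the model (the "one shot").
EXAMPLE = (
    "Job description: Backend engineer, 3+ years of Python, SQL.\n"
    "Resume: 5 years building Python services backed by PostgreSQL.\n"
    "Verdict: Passed"
)


def build_prompt(job_description: str, resume: str) -> str:
    """Assemble a one-shot classification prompt."""
    return (
        "Classify the resume as Passed or Failed for the job description.\n"
        "Answer with a single word.\n\n"
        f"{EXAMPLE}\n\n"
        f"Job description: {job_description}\n"
        f"Resume: {resume}\n"
        "Verdict:"
    )


def parse_verdict(raw: str) -> str:
    """Map free-form model output onto the two allowed labels."""
    text = raw.strip().lower()
    if text.startswith("passed"):
        return "Passed"
    if text.startswith("failed"):
        return "Failed"
    raise ValueError(f"Unexpected model output: {raw!r}")


def screen(job_description: str, resume: str, llm: Callable[[str], str]) -> str:
    # `llm` is any function that takes a prompt string and returns text.
    return parse_verdict(llm(build_prompt(job_description, resume)))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return " Passed\n"


print(screen("Data engineer, Spark, Airflow", "4 years of Spark pipelines", fake_llm))
```

Passing the model in as a plain callable keeps the prompt logic testable without network access, and the strict parser surfaces unexpected output instead of silently accepting it.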

RAG (Retrieval Augmented Generation)

Retrieval methods enhance LLMs by providing relevant context, making them more current, precise, and practical. You can grant LLMs access to internal data for processing and manipulation. This context allows the LLM to extract information, create summaries, and generate responses. RAG can also incorporate up-to-date information by retrieving the latest data at query time.

Example:

- The resume screening application can be improved by retrieving internal company data, such as engineering playbooks, policies, and past resumes, to enrich the context and make better classification decisions.

- Retrieval typically relies on techniques and tools such as text embedding (vectorization), vector databases, and semantic search.
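To make the retrieval step concrete, here is a rough sketch of ranking documents by similarity and placing the best match into the prompt. It rests on loud assumptions: `embed` is a toy bag-of-words counter standing in for a real embedding model, the in-memory list stands in for a vector database, and the three documents are invented for illustration.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents; a real system would load these from a store.
DOCUMENTS = [
    "Engineering playbook: backend engineers must know Python and SQL.",
    "Policy: all hires need work authorization before the interview stage.",
    "Office guide: the cafeteria opens at noon.",
]


def embed(text: str) -> Counter:
    """Toy embedding: word counts (an assumption, not a learned model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_prompt(job_description: str, resume: str, documents: list, k: int = 1) -> str:
    """Put the retrieved context ahead of the classification question."""
    hits = retrieve(f"{job_description} {resume}", documents, k)
    context = "\n".join(f"- {doc}" for doc in hits)
    return (
        "Use the company context to classify the resume as Passed or Failed.\n\n"
        f"Company context:\n{context}\n\n"
        f"Job description: {job_description}\n"
        f"Resume: {resume}\n"
        "Verdict:"
    )


print(build_rag_prompt("Backend engineer, Python, SQL", "Python and SQL developer", DOCUMENTS))
```

With real embeddings the ranking would capture meaning rather than shared words, but the shape stays the same: embed the query, rank stored documents, and pass the top results to the model as context.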

Tool Use & AI Workflow

LLMs can automate business processes by following well-defined paths. They're most effective for consistent, well-structured tasks.

Tool use enables workflow automation. By connecting to APIs, whether for calculators, calendars, email services, or search engines, LLMs can leverage reliable external utilities instead of relying on their internal, non-deterministic capabilities.

Example:

- An AI workflow can connect to the hiring portal to fetch resumes and job descriptions → evaluate qualifications based on experience, education, and skills → send the appropriate email response (rejection or interview invitation).

- For this resume-screening workflow, the LLM requires access to the database, email API, and calendar API. It follows predefined steps to automate the process programmatically.
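The fixed path in this example (fetch, evaluate, email) might look like the sketch below. Everything named here is hypothetical: the `Application` record, the three injected functions, and the keyword check that stands in for an LLM evaluation. The calendar step is left out to keep the sketch short.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Application:
    candidate_email: str
    resume: str
    job_description: str


def run_screening_workflow(
    fetch_applications: Callable[[], list],
    evaluate: Callable[[Application], bool],
    send_email: Callable[[str, str], None],
) -> dict:
    """Run the fixed path: fetch, then evaluate, then email. The order never changes."""
    counts = {"invited": 0, "rejected": 0}
    for app in fetch_applications():
        if evaluate(app):
            send_email(app.candidate_email, "Interview invitation")
            counts["invited"] += 1
        else:
            send_email(app.candidate_email, "Application update")
            counts["rejected"] += 1
    return counts


# Stand-ins for the hiring portal, the LLM evaluation, and the email API.
OUTBOX = []


def fetch_applications() -> list:
    return [
        Application("ana@example.com", "Five years of Python and SQL", "Backend engineer, Python"),
        Application("bo@example.com", "Graphic designer, Figma", "Backend engineer, Python"),
    ]


def evaluate(app: Application) -> bool:
    # A real workflow would call an LLM here; a keyword check keeps this runnable.
    return "python" in app.resume.lower()


def send_email(to: str, subject: str) -> None:
    OUTBOX.append((to, subject))


result = run_screening_workflow(fetch_applications, evaluate, send_email)
print(result)
```

The control flow lives in ordinary code, and the model only fills in the evaluation step. That is what keeps a workflow predictable: the same input always travels the same path.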

AI Agent

AI Agents are systems that reason and make decisions independently. They break tasks down into steps, use external tools as needed, evaluate the results, and determine the next action: store the results, request human input, or proceed to the next step.

This represents another layer of abstraction above tool use & AI workflow, automating both planning and decision-making.

While AI workflows typically run on explicit triggers (like button clicks) and follow programmatically defined paths, AI Agents can initiate workflows independently and determine their sequence and combination dynamically.

Example:

- An AI Agent can manage the entire recruitment process, including parsing CVs, coordinating availability via chat or email, scheduling interviews, and handling schedule changes.

- This comprehensive task requires the LLM to access databases, email and calendar APIs, plus chat and notification systems.
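The difference from a workflow shows up in the control loop: the next step is chosen at run time rather than written into the code. The sketch below is a simplified illustration under stated assumptions. The `decide` function is a scripted stand-in for an LLM that would pick the next tool, and the tool names and return strings are invented.

```python
from typing import Callable


def run_agent(
    goal: str,
    decide: Callable[[str, list], dict],
    tools: dict,
    max_steps: int = 10,
) -> list:
    """Ask the policy for the next action, run the tool, record the observation."""
    history = []
    for _ in range(max_steps):  # Hard cap so a confused policy cannot loop forever.
        action = decide(goal, history)
        if action["tool"] == "finish":
            break
        tool = tools.get(action["tool"])
        if tool is None:
            observation = f"unknown tool: {action['tool']}"
        else:
            observation = tool(action.get("args", {}))
        history.append((action["tool"], observation))
    return history


# Hypothetical tools standing in for the CV parser, calendar API, and email API.
TOOLS = {
    "parse_cv": lambda args: "skills: python, sql",
    "check_calendar": lambda args: "free: Tue 10:00",
    "send_invite": lambda args: f"invite sent to {args.get('email', 'unknown')}",
}


def scripted_decide(goal: str, history: list) -> dict:
    # A real agent would prompt an LLM with the goal and history here.
    plan = [
        {"tool": "parse_cv"},
        {"tool": "check_calendar"},
        {"tool": "send_invite", "args": {"email": "ana@example.com"}},
    ]
    return plan[len(history)] if len(history) < len(plan) else {"tool": "finish"}


for step in run_agent("Schedule an interview", scripted_decide, TOOLS):
    print(step)
```

The `max_steps` cap and the handling of unknown tool names are small guardrails. Because the model chooses each action, the loop has to tolerate choices the developer did not anticipate.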

Key takeaways

1. Not every system requires an AI agent

Start with simple, composable patterns and add complexity as needed. For some systems, retrieval alone suffices. In our resume screening example, a straightforward workflow works well when the criteria and actions are clear. Consider an Agent approach only when greater autonomy is needed to reduce human intervention.

2. Focus on reliability over capability

The non-deterministic nature of LLMs makes building dependable systems challenging. While creating proofs of concept is quick, scaling to production often reveals complications. Begin with a sandbox environment, implement consistent testing methods, and establish guardrails for reliability.
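One simple guardrail for the resume-screening case is to accept only the expected labels, retry on anything else, and hand off to a person when retries run out. The sketch below shows one possible shape for this. The function names, the retry count, and the "Needs human review" fallback are assumptions made for illustration.

```python
from typing import Callable

ALLOWED = {"Passed", "Failed"}


def classify_with_guardrail(
    prompt: str,
    llm: Callable[[str], str],
    max_attempts: int = 3,
) -> str:
    """Accept only allowed labels, retry otherwise, and escalate if retries run out."""
    for _ in range(max_attempts):
        answer = llm(prompt).strip()
        if answer in ALLOWED:
            return answer
    return "Needs human review"


def make_scripted_llm(responses: list) -> Callable[[str], str]:
    """Test double that replays canned responses in order."""
    replies = iter(responses)

    def llm(prompt: str) -> str:
        return next(replies)

    return llm


# First reply is off-format, second is valid: the guardrail retries once.
print(classify_with_guardrail("Classify this resume.", make_scripted_llm(["Maybe?", " Passed "])))

# Every reply is off-format: the decision is escalated instead of guessed.
print(classify_with_guardrail("Classify this resume.", make_scripted_llm(["?", "??", "???"])))
```

Scripted test doubles like `make_scripted_llm` also support consistent testing: the same canned replies can be replayed on every run, so a failure points at the surrounding code rather than at model variance.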