Authored by Savannah Fortis via CoinTelegraph.com,

Ethereum co-founder Vitalik Buterin has shared his take on “superintelligent” artificial intelligence, calling it “risky” in response to ongoing leadership changes at OpenAI.

On May 19, Cointelegraph reported that OpenAI’s former head of alignment, Jan Leike, resigned after saying he had reached a “breaking point” with management on the company’s core priorities.

Leike alleged that “safety culture and processes have taken a backseat to shiny products” at OpenAI, with many pointing toward developments around artificial general intelligence (AGI).

AGI is anticipated to be a type of AI equal to or surpassing human cognitive capabilities — the thought of which has already begun to worry industry experts, who say the world isn’t properly equipped to manage such superintelligent AI systems.

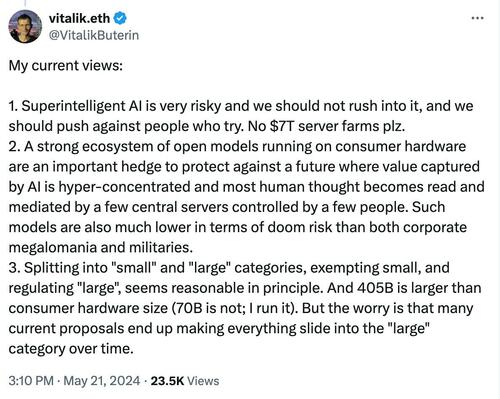

This sentiment seems to align with Buterin’s views. In a post on X, he shared his thoughts on the topic, emphasizing that people should not rush into developing superintelligent AI and should push back against those who try.

Source: Vitalik Buterin

Buterin described open models that run on consumer hardware as a “hedge” against a future where a small conglomerate of companies would be able to read and mediate most human thought.

“Such models are also much lower in terms of doom risk than both corporate megalomania and militaries.”

This is his second comment in the last week on AI and its increasing capabilities.

On May 16, Buterin argued that OpenAI’s GPT-4 model has passed the Turing test, which determines the “humanness” of an AI model. He cited new research that claims most humans can’t determine when they are talking to a machine.

However, Buterin is not the first to express this concern. The United Kingdom’s government also recently scrutinized Big Tech’s increasing involvement in the AI sector, raising issues related to competition and market dominance.

Groups like 6079 are already emerging across the internet, advocating for decentralized AI to ensure it remains more democratized and not dominated by Big Tech.

Source: 6079

This follows the departure of another senior member of OpenAI’s leadership team on May 14, when Ilya Sutskever, co-founder and chief scientist, announced his resignation.

Sutskever did not mention any concerns about AGI. Instead, in a post on X, he expressed confidence that OpenAI will develop an AGI that is “safe and beneficial.”