Supabase Storage is now officially an S3-Compatible Storage Provider. This is one of the most-requested features and is available today in public alpha. Resumable Uploads are also transitioning from Beta to Generally Available.

The Supabase Storage Engine is fully open source and is one of the few storage solutions that offer 3 interoperable protocols to manage your files:

We always strive to adopt industry standards at Supabase. Supporting standards makes workloads portable, a key product principle. The S3 API is undoubtedly a storage standard, and we're making it accessible to developers of various experience-levels.

The S3 protocol is backwards compatible with our other APIs. If you are already using Storage via our REST or TUS APIs, today you can use any S3 client to interact with your buckets and files: upload with TUS, serve them with REST, and manage them with the S3 protocol.

The protocol works on the cloud, local development, and self-hosting. Check out the API compatibility in our docs

Authenticating with Supabase S3

To authenticate with Supabase S3 you have 2 options:

-

The standard

access_keyandsecret_keycredentials. You can generate these from the storage settings page. This authentication method is widely compatible with tools supporting the S3 protocol. It is also meant to be used exclusively serverside since it provides full access to your Storage resources.We will add scoped access key credentials in the near future which can have access to specific buckets.

-

User-scoped credentials with RLS. This takes advantage of a well-adopted concept across all Supabase services, Row Level Security. It allows you to interact with the S3 protocol by scoping storage operations to a particular authenticated user or role, respecting your existing RLS policies. This method is made possible by using the Session token header which the S3 protocol supports. You can find more information on how to use the Session token mechanism in the doc.

S3-compatible Integrations

With the support of the S3 protocol, you can now connect Supabase Storage to many 3rd-party tools and services by providing a pair of credentials which can be revoked at any time.

You can use popular tools for backups and migrations, such as:

- AWS CLI: The official AWS CLI

- rclone: a command-line program to manage files on cloud storage.

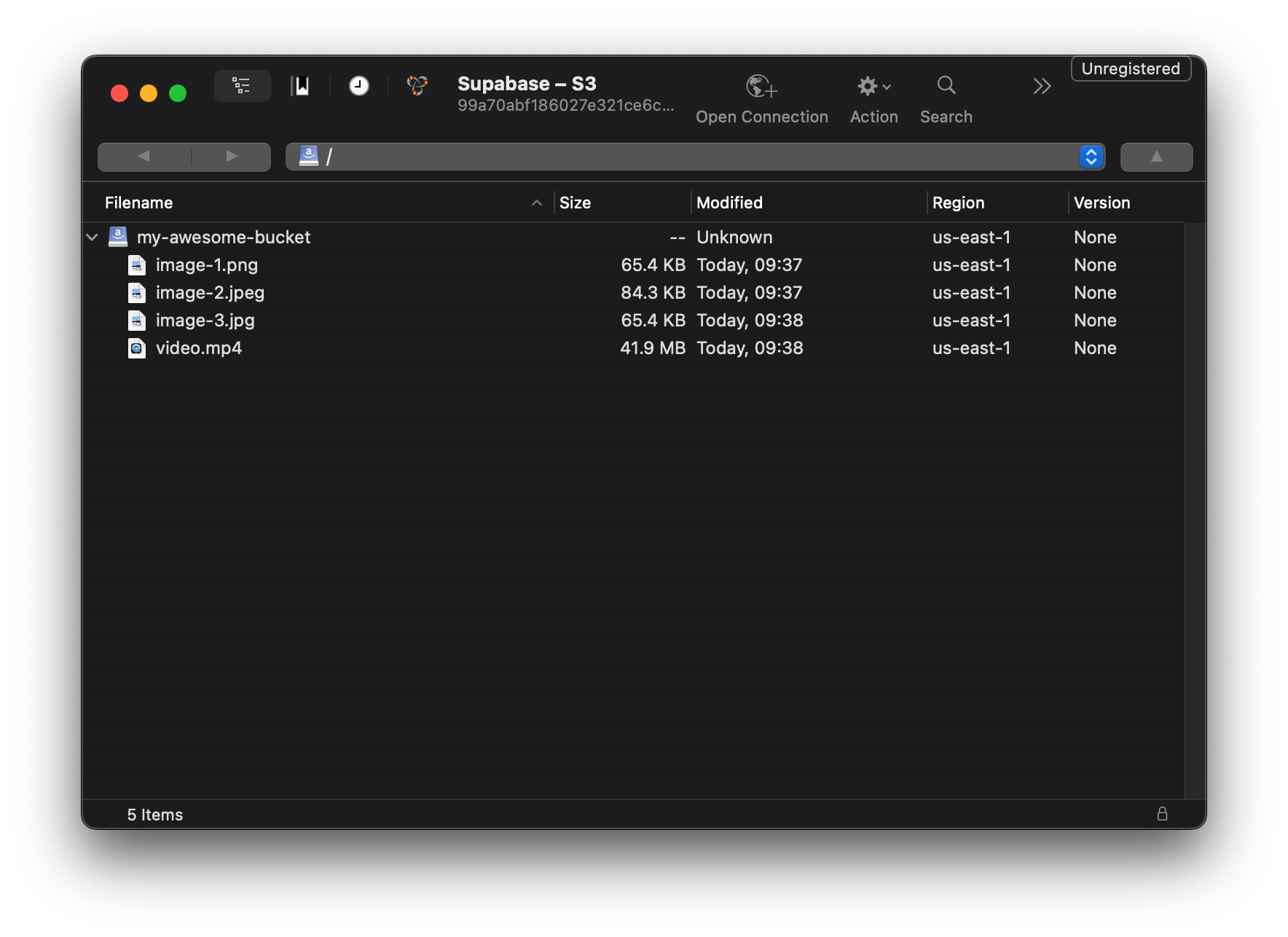

- Cyberduck: a cloud storage browser for Mac and Windows.

- and any other s3-compatible tool ...

Check out our Cyberduck guide here.

S3 for Data Engineers

S3 compatibility provides a nice primitive for Data Engineers. You can use it with many popular tools:

- Data Warehouses like ClickHouse

- Query engines like DuckDB, Spark, Trino, & Snowflake External Table

- Data Loaders like Fivetran & Airbyte

In this example our incredible data analyst, Tyler, demonstrates how to store Parquet files in Supabase Storage and query them directly using DuckDB:

Multipart Uploads in S3

In addition to the standard uploads and resumable uploads, we now support multipart uploads via the S3 protocol. This allows you to maximize upload throughput by uploading chunks in parallel, which are then concatenated at the end.

Along with the platform GA announcement, we are also thrilled to announce that resumable uploads are also generally available.

Resumable uploads are powered by the TUS protocol. The journey to get here was immensely rewarding, working closely with the TUS team. A big shoutout to the maintainers of the TUS protocol, @murderlon and @acconut, for their collaborative approach to open source.

Supabase contributed some advanced features from the Node implementation of TUS Spec including distributed locks, max file size, expiration extension and numerous bug fixes:

These features were essential for Supabase, and since the TUS node server is open source, they are also available for you to use. This is another core principle: wherever possible, we use and support existing tools rather than developing from scratch.

- Cross-bucket transfers: We have added the availability to copy and move objects across buckets, where previously you could do these operations only within the same Supabase bucket.

- Standardized error codes: Error codes have now been standardized across the Storage server and now will be much easier to branch logic on specific errors. You can find the list of error codes here.

- Multi-tenant migrations: We made significant improvements to the running migrations across all our tenants. This has reduced migration errors across the fleet and enables us to run long running migrations in an asynchronous manner. Stay tuned for a separate blog post with more details.

- Decoupled dependencies: Storage is fully decoupled from other Supabase products, which means you can run Storage as a standalone service. Get started with this docker-compose file.